Project Updates

-

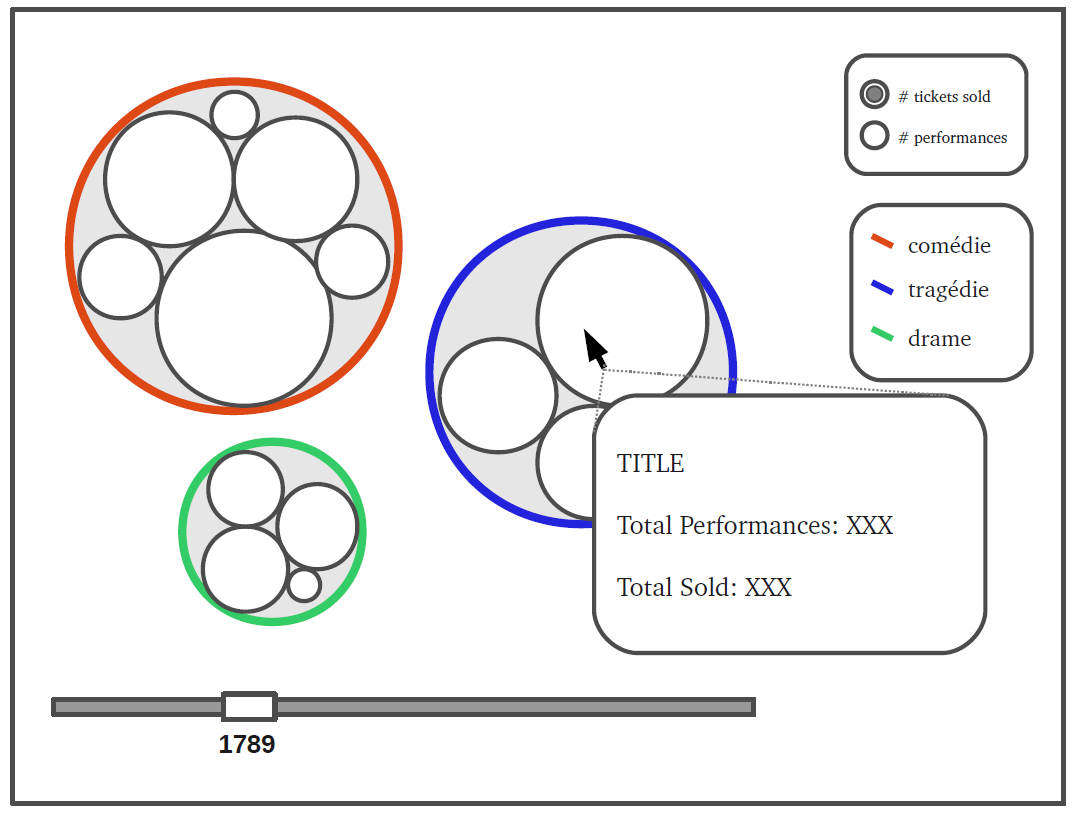

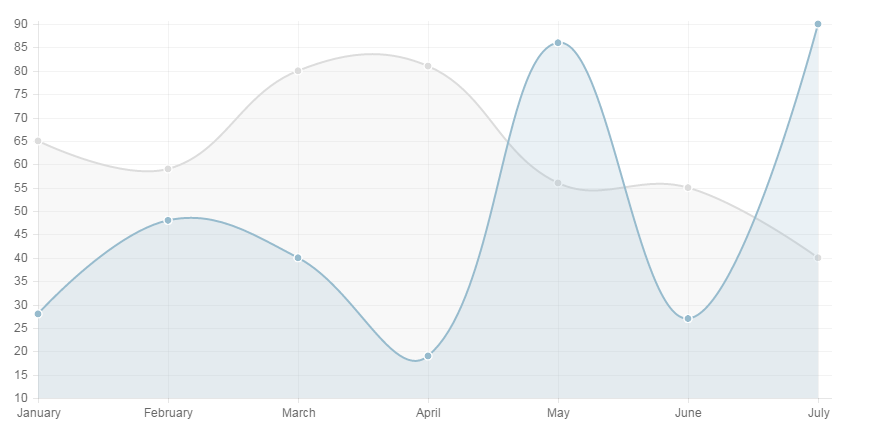

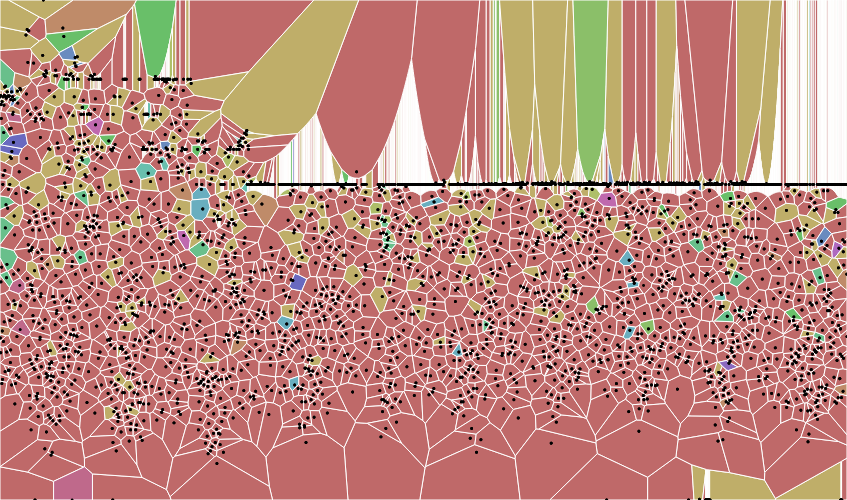

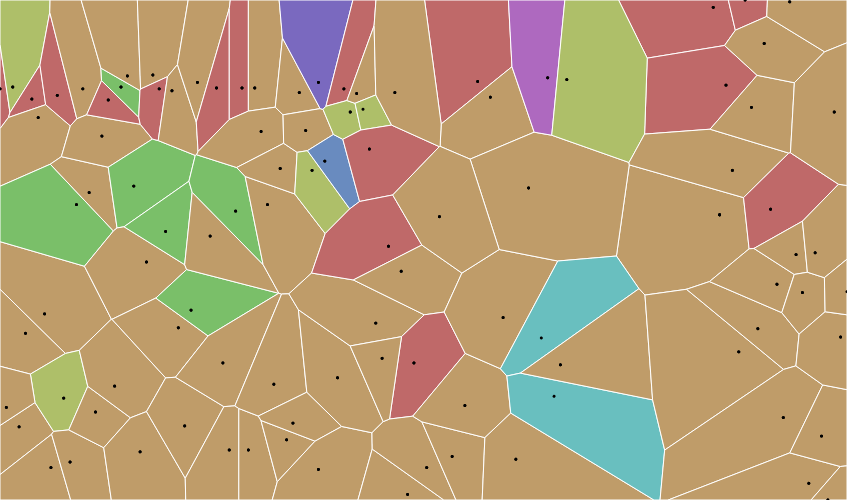

vr-mfa design document and presentation

Here is the design document and the presentation.

-

Final Design Document

Design Document PDF Final Presentation

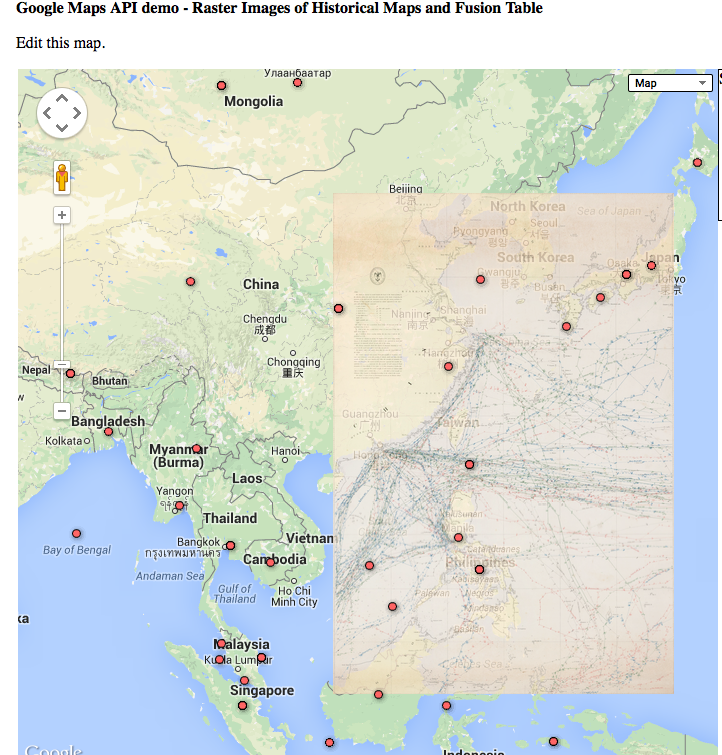

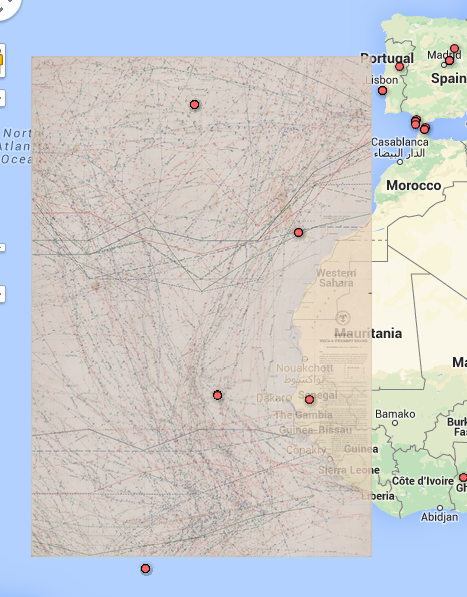

Live Demos, Please Don’t Modify Them (you can play them though) http://mediabitch.mit.edu:8000/#69e77f63-42bf-46ed-9902-b9e40e2028c4 http://mediabitch.mit.edu:8000/#ec7fe0c6-486b-457a-89fd-379690e94a8a

-

Annotating the Air

Here is our final paper

and our final presentation

-

NewsConnect Design Document Final Draft

I. Project name

II. Team members

Meghana Bhat is a sophomore in Course 6 at MIT. For the NewsConnect project, Meghana created the code that made the app a reality. She worked to incorporate news data with our map, and transferred UI ideas into code.

Karleigh Moore is a junior in CMS at MIT. For the NewsConnect project, Karleigh initially worked on the news data scraper, but then focused on deciding what sorts of news data (keywords, sources, places) we would look for with a different scraper. She worked to brainstorm UI features, create mockups, and was in charge of creating the documentation and presentations regarding the project.

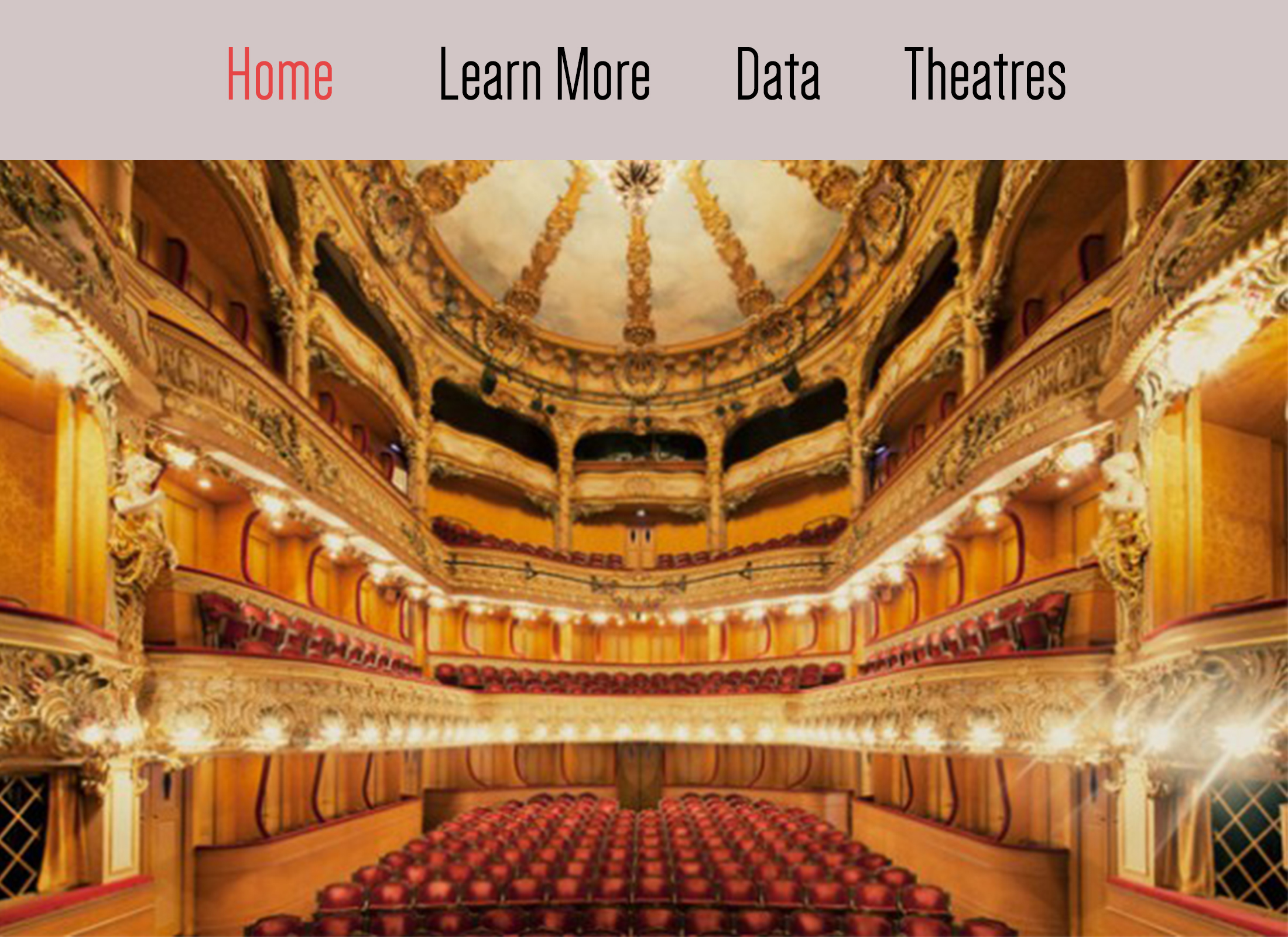

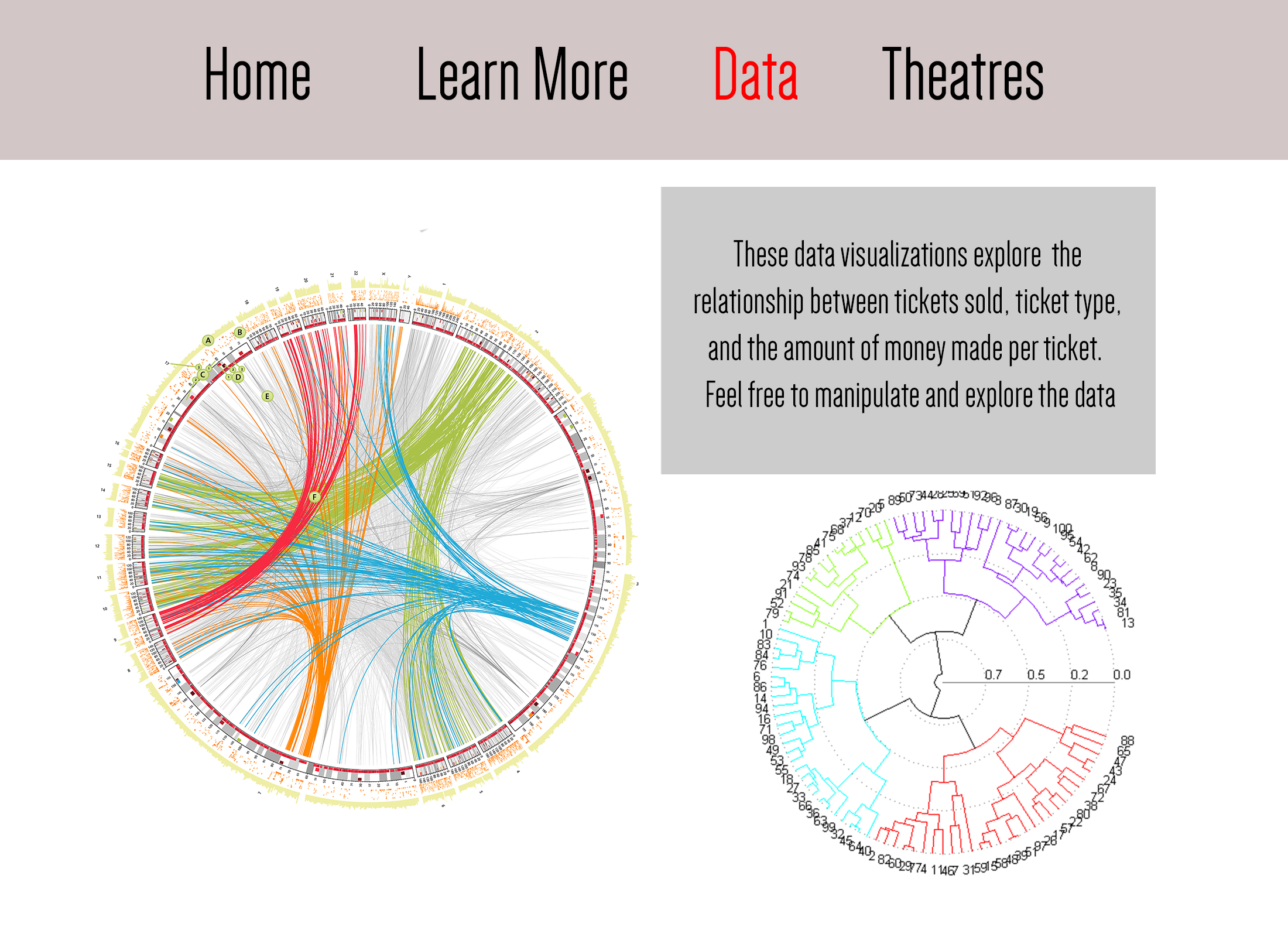

III. Concept overview/abstract

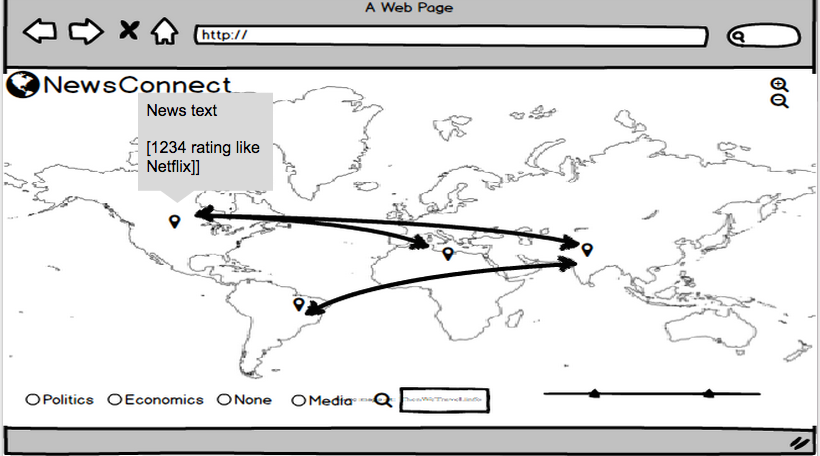

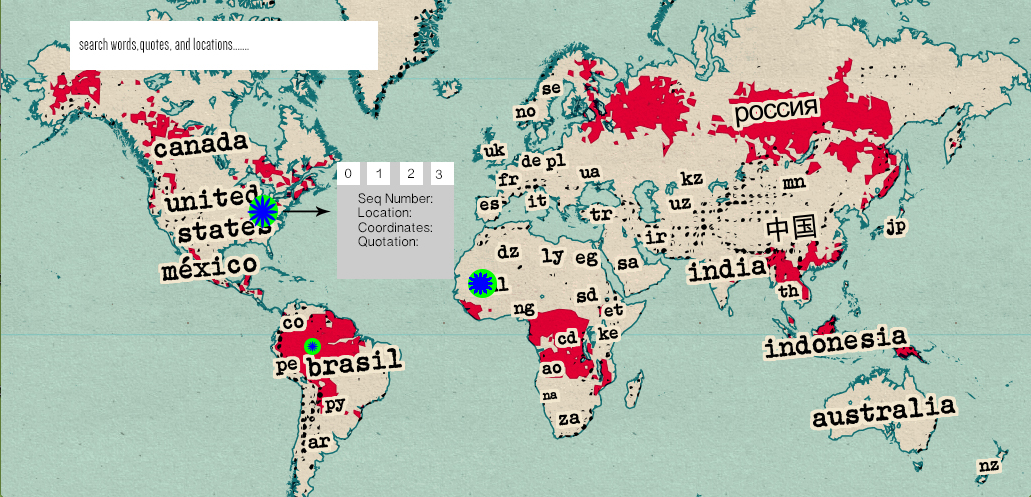

NewsConnect is a web application that allows users to see global connections in news, and makes it easy to find only the news you want to read.

Key features of NewsConnect include:

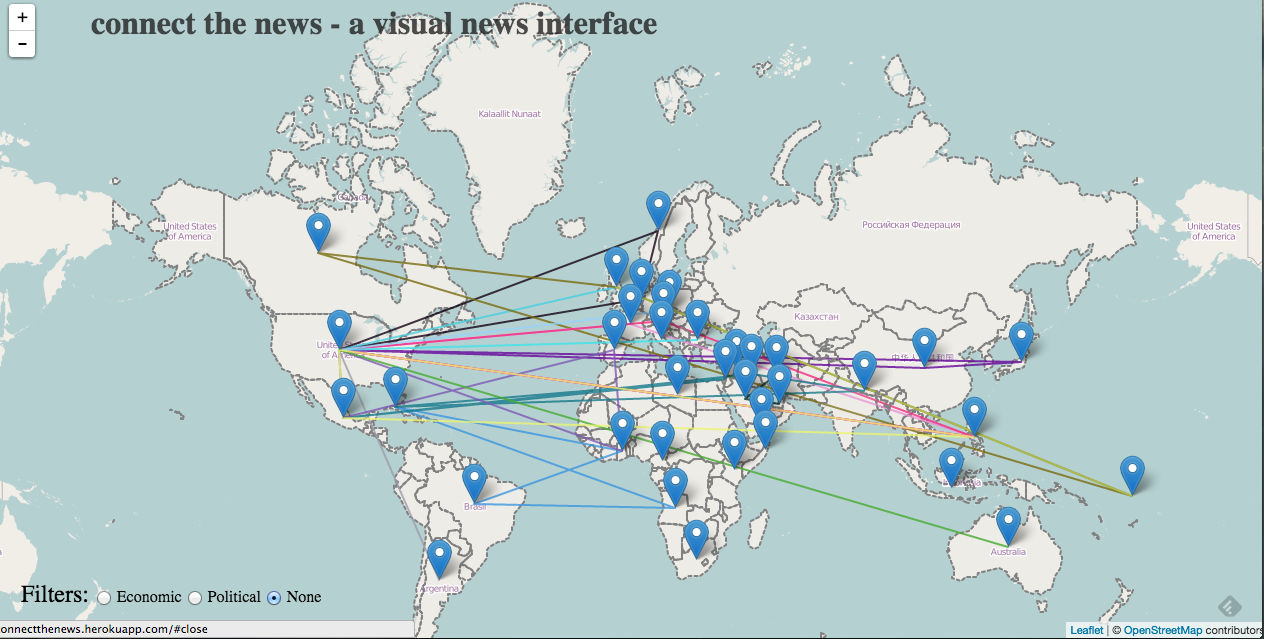

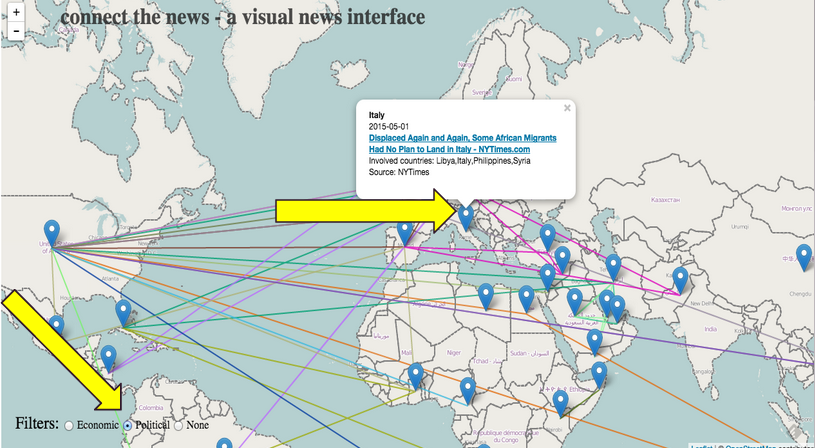

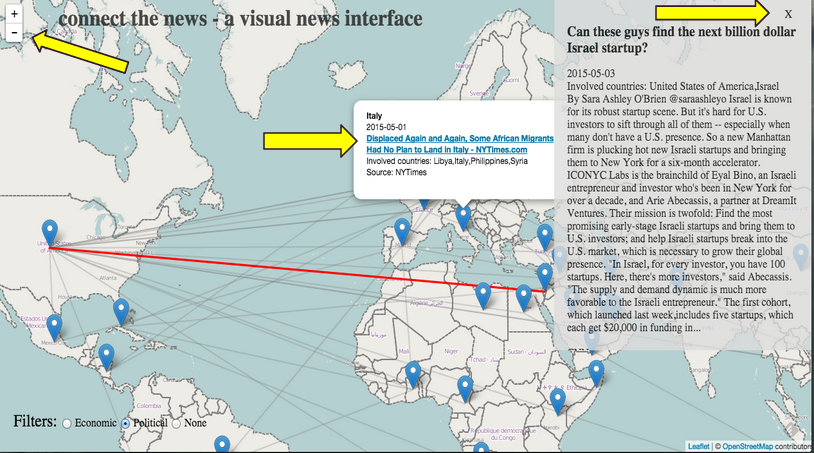

- Lines connecting points within nations (that represent an article). So if an article has to do with China and India, the two countries will have a connection line.

- Users can filter articles by category. This would make it easy, for example, to narrow down articles to only economics articles.

- Users can get a preview of an article by hovering over a map point.

- NewConnect will eventually include a timeline filter so that users can see articles in a particular time range, in addition to a specific category and/or country.

It’s very easy to find news articles to read on the internet, but it can be overwhelming to sift through so much text, and to find what you’re looking for beyond basic categories of “politics” and “economics”. In particular, with world news, it can be hard to track patterns of news across nations. A student might be doing a research project on international relations between, for example, China and European countries. He or she can look up news about China, or news about Europe, or news about the world, but seeing news connecting both locations, and seeing the development of that news over time, is significantly harder. A user might also, for example, have an interest in West African nations and articles relating to politics of the region. It would take a lot of effort to track news about the particular region related to one specific topic, especially if you want news from many sources.

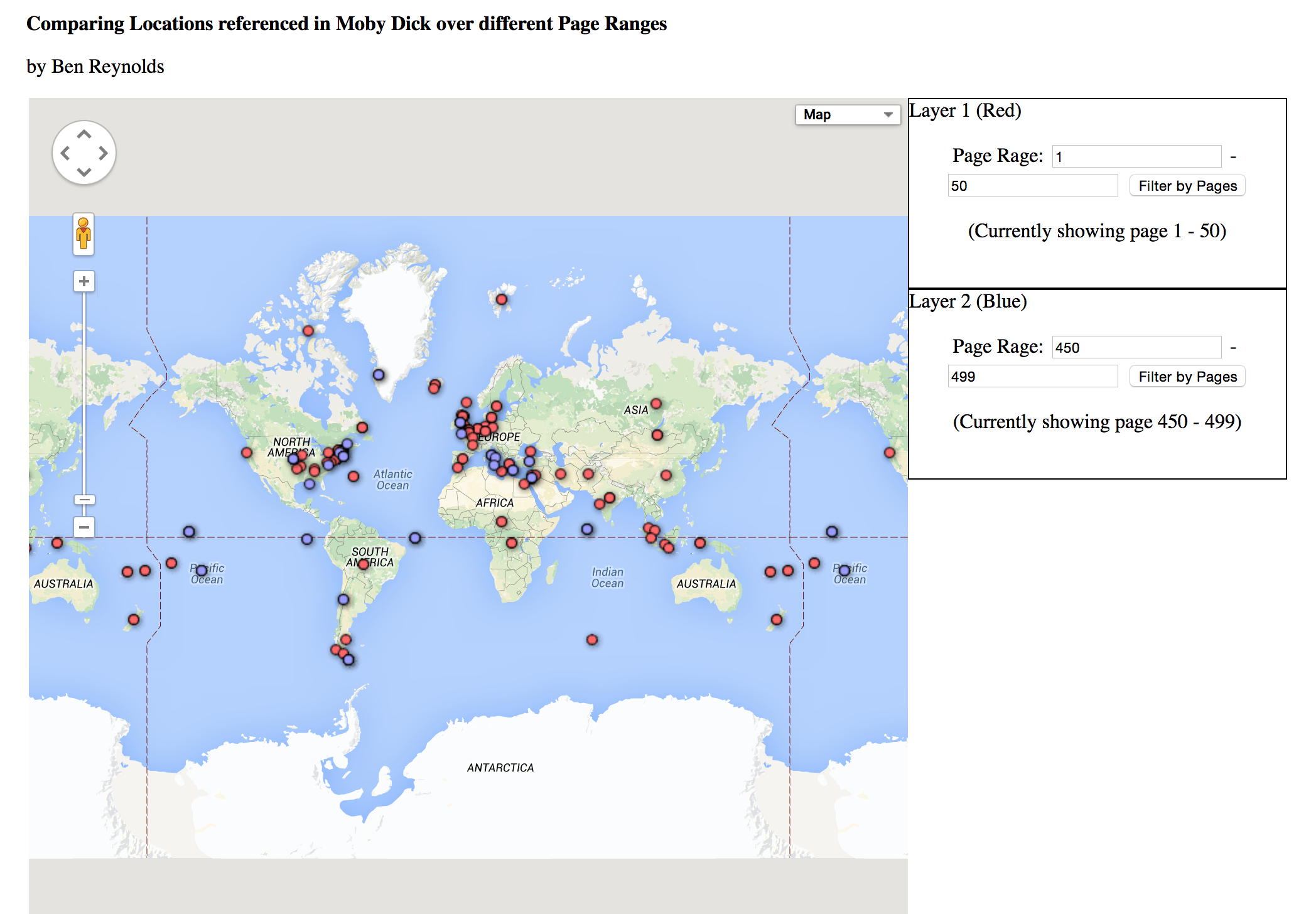

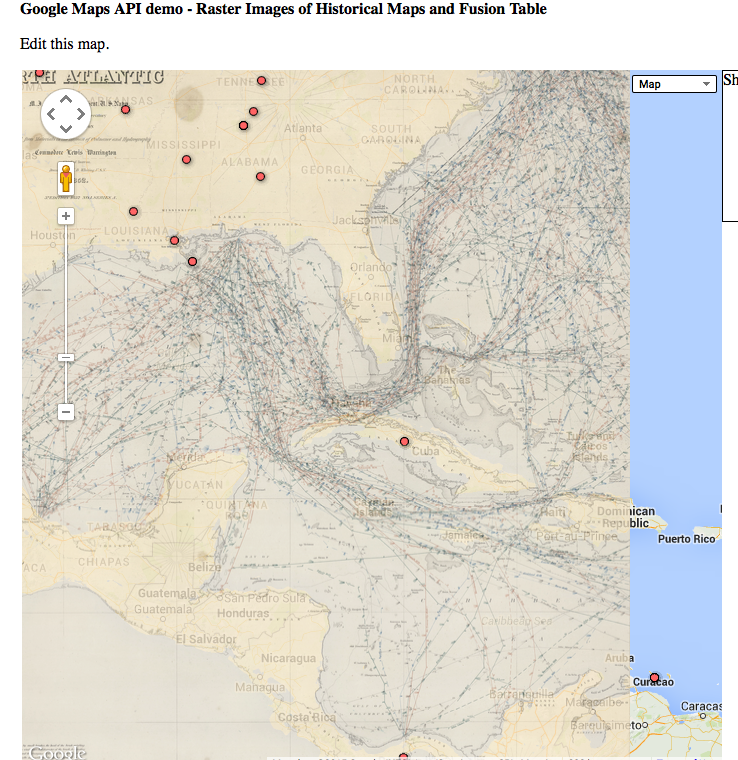

To address these problems, we want to make NewsConnect, a visual and engaging news webapp. We want to avoid walls of text, and make the interface informative, but not intimidating. We did this by putting news articles on a world map, associating the articles with the countries discussed in the text, and visually showing links between countries mentioned. A user will have access to the news they are looking for (with the help of our features) without having to skim through many articles, disrupting their workflow as they are taken to new pages with every click. Better, they can investigate a particular news issue over time in a geographic context. NewsConnect is also a way to discover trends in world news including coverage, source bias, and more.

IV. Background research

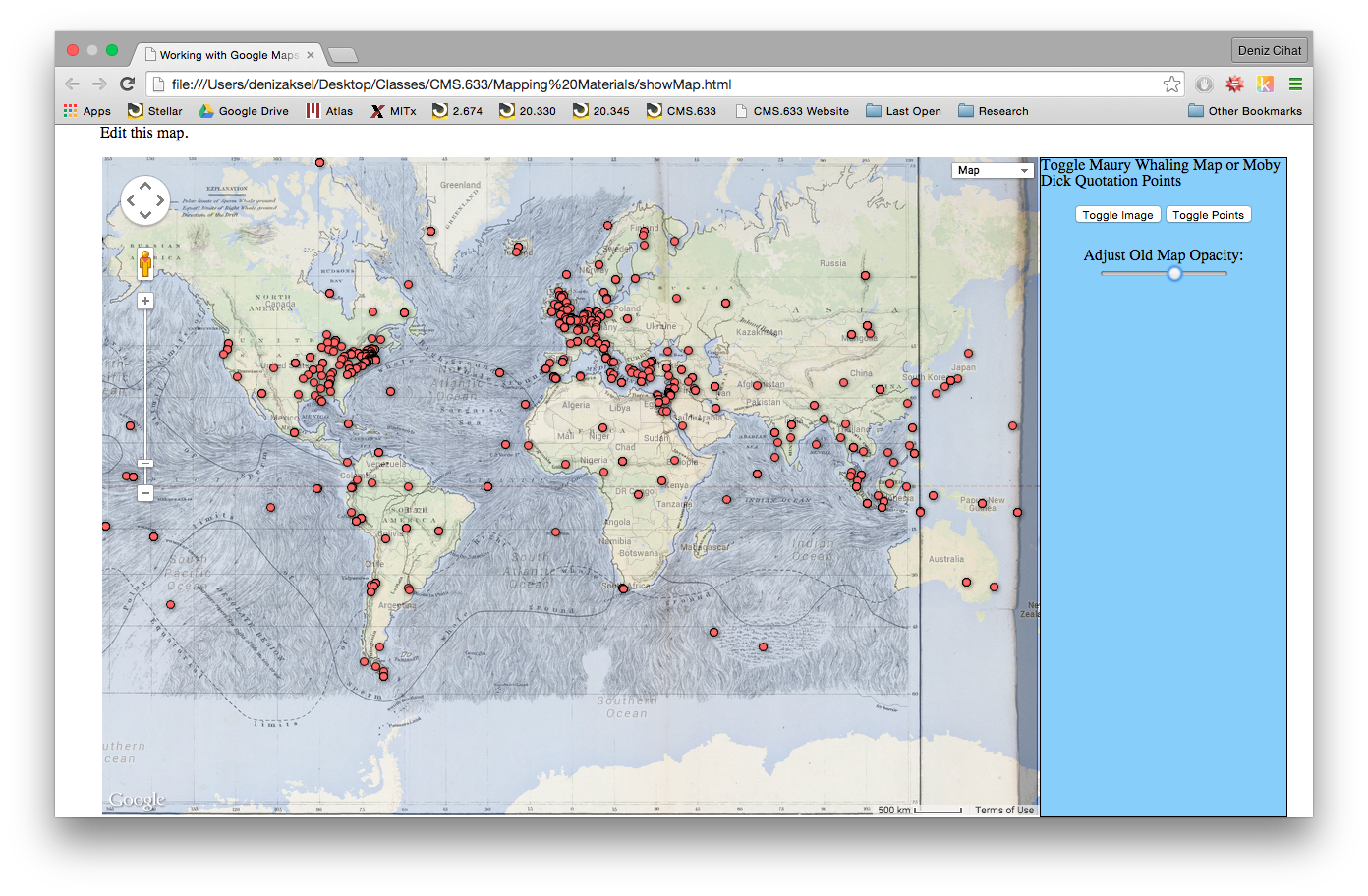

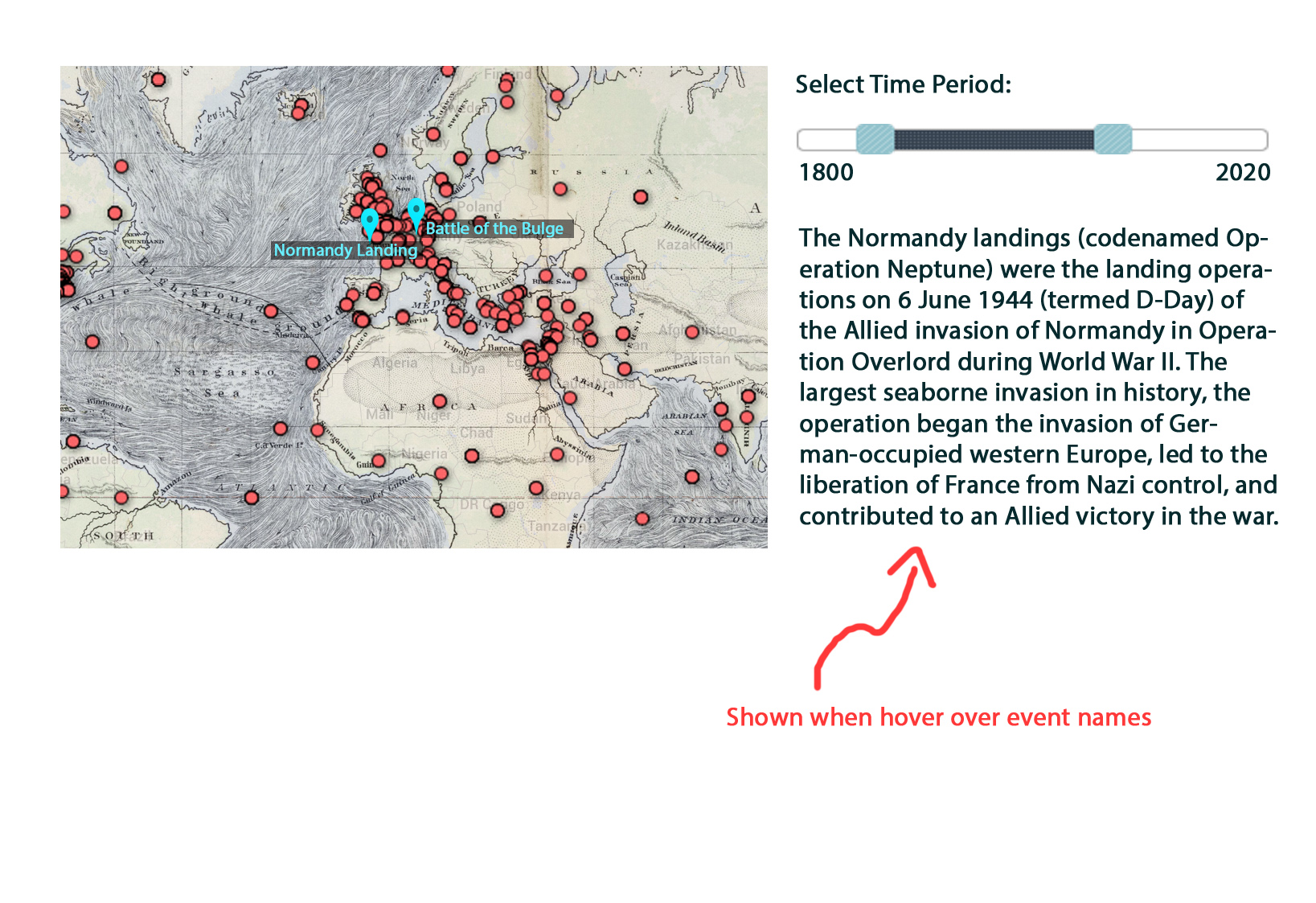

We met with Liam Andrew, and he showed us a webapp called Gistory.co. Gistory.co is a visual news interface that lays articles on a map according to the location of the events in the article. We thought it was a great idea to overlay news on a map to give a clear visual idea of the places mentioned in the article, and we wanted to apply this concept to world news — keeping with the theme of visually connecting news content to places.

The MIT Center for Civic Media conducted a project that we thought was pretty useful too — CLIFF. CLIFF extracts people, places, and organizations from news articles, and it’s goal is to understand what the article is really about, rather than an amalgamation of all the places mentioned. These ideas were particularly relevant to our project because at times, an article about Egypt and Israel would be linked also to Korea because a Korean journalist was mentioned. We didn’t use CLIFF technology in our project, and we took care of this issue later by using webhose.io to gather location data provided by the news sites themselves. Though perhaps in the future, we would want to use something like CLIFF to do this instead, to eliminate any possible bias that exists from getting this information from the content source.

V. Project Developments/Process

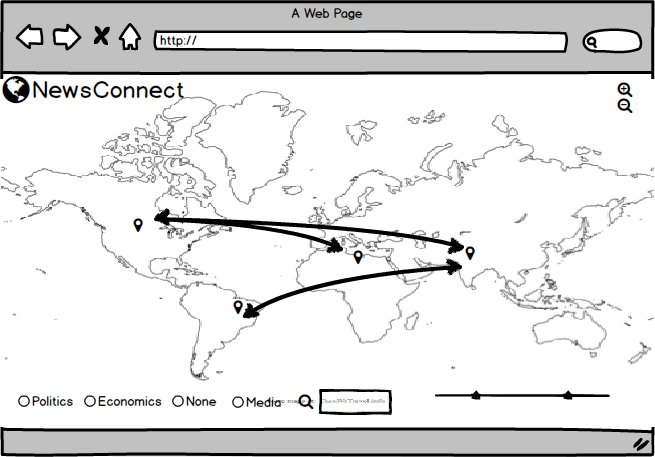

The first phase of our project was to prototype and determine how we were going to gather our news data. We drew some paper sketches of what we wanted our interface to look like [see below]. We began to scrap news data from CNN using a scraper we built using the Newspaper Python library. Choosing a scraping method is something that we continued to have problems with throughout the project because there were a variety of pros and cons between different software. We settled on webhose.io to scrape news data. With webhose.io we can specify which new sites we want articles from, specify a popularity feature (to only include articles with a high number of hits, to increase the relevancy of a given article), and we can input keywords we want the scraper to look for within articles. This helped us establish our category feature on our web app. We decided on a few lists of terms commonly associated with “politics” and “economics” and so forth, and the scraper helps us to allow users to sort articles with these parameters. In the future, this capability will expand to include many more search terms, hopefully in some automated way. Additionally, webhose.io provides location data for some news sources which helps us get around using geopy for location information for the world map interface.

The second component of our project had to do with the user interface. We really wanted the focus of the project to be making reading news a more visual and interactive experience. We decided that having a full screen map with pins and connecting lines to indicate which articles were associated with which countries was the first component of our app. We wanted connecting lines to be color coordinated with topic: all the blue lines, for example, could represent articles about politics, and red lines could indicate economics. We thought that radio buttons would be the most straightforward option for users to select which news topics they’d like to see, but this could easily evolve into a multi-option selection process in the future, when we have more categories and comparison/connection data. When a user hovers over a country’s pinpoint, a list of articles about that country appear. A user can then hover over a specific article, and then only the paths connecting that article to various countries will be highlighted, all the other lines on the map turn more transparent. We wanted users to be sure that they found an article they want to read before they have to travel to the news site and sift through the article. By the time they are given articles to read, they already know the article’s topic and the countries the article deals with. Additionally, a preview of the article appears on the page if a user clicks on the article block element — the user can read this short preview and decide if they want to click the article link to go to the full article.

VI. Enabling Technologies

Balsamiq: A wireframing tool we used to prototype our project, and to create a vision for future directions of the app.

Leaflet: Leaflet is an open-source JavaScript library for making interactive maps. We used Leaflet to generate the map and it’s features that are the main visual component to our app.

Newspaper +Stanford NER + webhose.io : Our first data scraping setup used the Python library Newspaper, and then scanned the articles that it scraped using the Stanford Named-Entity-Recognition tool for location data. This wasn’t the best process because the NER wasn’t as consistent or accurate as we would’ve liked (an article mentioned the animal “turkey” and associated the article with the country Turkey). The Newspaper library didn’t have a way for us to extract category data either, which we decided was a key point of our project. We found that webhose.io was much better suited for our project. It’s an API that can gather information from any number of sources, and allows us to query by keyword. With this, we selected sources that we knew would provide location data, so we were able to get accurate location data and sidestepped using the NER. Additionally, we were able to easily implement category funtions with the data webhose.io scraped.

Heroku: Heroku is a cloud service that takes care of server management, and we hosted our app using Heroku.

Flask: Flask is a Python library for micro web application frameworks. We used this for our server related code.

Geopy: We used the Python Geopy library to match location data we got from the NER and later, webhose.io to places on the world map. The library contains coordinate data, so we were able to place articles in and connect them with the countries that mentioned them.

VII. Journey Map

a. The user will see a world map, populated with news

b. The user can change what he or she sees on the map by doing any combination of the following: - Selecting a country to zoom in on by double clicking on a region - Adjusting the timeline range with the slider bars - Selecting a specific news topic by checking a labeled radio button

c. The user can get a list of the articles pertaining to a specific country by hovering over the pin in the country.

d. The user can hover over an article in the the list of articles and see a preview of the article appear on the screen.

e. A user can click on the link to the article they see (before or after seeing the preview) and the article will open in a new tab. Or if a user decides that the article is not one they want to read, they can close the preview, and zoom back out to the world view.

VIII. Future Directions

With more time and access to more robust APIs, we would like to incorporate more news sources from all around the world, have more comprehensive category/search terms, and a way of saving, sharing, and rating news articles users discover on our app. We’d like to incorporate a time line feature using a slider bar that a user can interact with to indicate a range of dates they’d like to see news from. Additionally, we wanted to have a “Zoom back to world” button, which would be the Globe icon next to the site title — a standard way of returning to the home screen of a site.

VIII. Works Cited

https://civic.mit.edu/cliff-entity-extraction-and-geoparsing-for-news-articles

https://gistory.co/

-

MuRoam Final Presentation and Paper

Hi all,

To see our current progress check out our final slide deck that we have prepared! Click here to be directed.

We have had a few issues with formatting on Annotation Studio. We have our latest draft on Google drive. Click here to be directed.

We are happy to answer any questions.

Cheers,

Team MuRoam

-

Link to dry run NewsConnect presentation

https://docs.google.com/presentation/d/1aTa7jq9VqeMWC1wvPXu-jygGD5yVP_0_UecxVVlr3sA/edit?usp=sharing

-

Design Document Draft

Wubify.me

Yanni Coroneos Massachusetts Institute of Technology, Electrical Engineering and Computer Science Programmer

Corey Walsh Massachusetts Institute of Technology, Electrical Engineering and Computer Science Programmer

Abstract

In this paper we will describe a novel medium for collaborative audio synthesis. By combining the achievements of previous products and taking into account the needs of the user, we have created wubify.me. We will show how users at every level of expertise can meaningfully benefit from the featureset and we especially believe that our shareable user-contributed block library can elevate the experience of audio synthesis to a social activity.

Concept

We envision a collaborative sound generation platform, accessible through the web so that both amateurs and professionals can create sound and have fun together. There are current technologies, such as SuperCollider, that can be used to collaboratively generate sound but they are mostly a niche product that is exclusive to audio professionals who are also expert programmers.

These technologies are powerful, and lend themselves well to our goal of sound generation, but are difficult for everyday users to access. Therefore, we decided to build a visual representation of the interface to bridge the gap in experience levels. We’re also able to leverage the nature of this visual interface to enable both real-time collaboration and a shared repository of reusable prior work.

Like some other programs, the users have a set of transfer functions that they can manipulate and compose. Filters, function generators, and even interactive elements are in the repository. A key advantage of wubify.me though is that this is all done in the browser, including the final composition step that actually plays the sounds.

Background Research

The need for wubify.me was realized when a hobbyist electronic music composer expressed interest in a visual composition system. He complained about the current status of SuperCollider and its lack of portability. The whole system is complicated and clunky which makes it difficult for artists to distribute their work. Additionally, the complications prevent new users from entering the scene. Despite these shortcomings though, there are several things SuperCollider does extremely well.

SuperCollider is the current leader in the field of algorithmic music generation. By allowing users to create and manipulate audio signals directly in frequency space through the use of basic building blocks, electronic music composition became more accessible for everyone with a computer. The basic principle of SuperCollider carries through to wubify.me: signals are shaped through a tree of transfer functions. For example, a sine wave might go through a low pass filter which then is fed into a block to modulate the frequency of a square wave. This direct manipulation of the frequency domain gives the user complete control of the spectrum of the audio signal. By combining this approach with a conventional sequencer, like is present in Audacity or garageband, the audio signal can be completely specified in both frequency domain and in time domain. This is critical for electronically composed music which often relies on un-naturally occurring sounds and spectra.

Project Development

Our application has a few goals:

- Improve accessibility of existing tools

- Utilize that improved access to allow collaboration

- Allow for persistence and reuse of the collaborative works

To accomplish these goals, we designed and implemented a proof-of-concept application with the following basic requirements:

- A visual interface to a tool like SuperCollider

- Real-time collaboration over the internet

- A shared, persistent ‘block’ library

The basic premiss of the proposed application is such: commutations on sounds or sound generators can be represented as building blocks, and any set of composed blocks can be represented by a single block. Starting with the set of “unit generators” (“ugens”) provided by Flocking.js, users can compose blocks to create more complex sounds and processing pipelines.

This premiss lends itself well to accomplishing all three of our requirements. A visual interface must simply allow for basic graph manipulation. Real-time collaboration is done by synchronizing the states of the visual graph representation across the users’ browsers. Finally, a block library must allow users to upload the data representation of their creations to a centralized location and then serve that information to other users alongside the standard ugens.

The implementation of this project decomposes into a few parts:

- Flocking.js Representation

- Graph Representation

- User Interface - Shared block library, real time collaboration

- Web Serving

- Persistence

The primary ‘brains’ of the technology is the algorithm that converts the graph representation into the valid JSON object that Flocking.js requires. This algorithm traverses the graph, at each node it generates a corresponding Ugen descriptor, populated with default values and user specified options. Finally, it sews the fragments into a cloth that represents the network of commutations in the frequency domain.

Enabling Technologies

Our proof-of-concept application would not be possible without the help of a long list of enabling technologies. Most notably:

Flocking.js - Flocking is a SuperCollider inspired JavaScript library that provides a similar interface and operation. It has the benefit of being executable in the browser - greatly simplifying the process of delivering audio output to our users

JointJS/Rappid - “A complete diagramming toolkit.” [1] JointJS is a JavaScript library that provides support for visualization and interaction with graphs. Rappid provides a set of higher level features that greatly sped up the development process of our proof-of-concept. While JointJS has a free and open source MPL 2.0 license, Rappid is a commercial product that was provided free of charge for our academic use.

Node.js - A leading, open source web-server platform written on top of Chrome’s high performance V8 JavaScript runtime. Node provides a platform for both our file serving needs and our application’s runtime requirements.

Journey Map

Wubify.me has a straightforward UI. The user simply logs in and is presented with an empty graph and a myriad of ugens on a left side pane. As mentioned previously, ugens are like function generators; Triangle waves, sawtooth waves, and square waves are among the basic ugens currently available. The user drags a few ugen blocks into the empty space and notices that they have input and output ports. By dragging arrows from outputs to inputs, the user can cascade the ugens. Mathematically, this is equivalent to multiplying transfer functions. Each ugen can have different inputs but the most common ones are add, mul, freq, and phase. Connecting an output to each of these ports either adds the output to the base frequency, multiplies the output with the base frequency, changes the base frequency, or changes the phase angle of the target ugen block. From there the user composes ugens and then hears the result by clicking the play button. If desired, the current cascade of blocks can be saved into a new super-block in the user block library by clicking the save button.

Future Direction

Our proof of concept application provides the basics, but we believe that this project could be taken much further. A built in sequencer would allow for more natural coordination of multiple generators. Currently, a similar effect can be achieved with multiple delay blocks and triggers, but the process could be greatly streamlined for the common use-case.

While our implementation is built on Flocking.js, only relatively minor changes would be required to make it work with SuperCollider. The UI would be virtually unchanged (aside from the available ugens); only a new graph parser would be required to generate valid SuperCollider code. The larger implementation challenge, however, would be integrating SuperCollider’s synthesis servers with the in-browser web application for near real-time sound playback.

Works Cited

http://jointjs.com/about-rappid

http://flockingjs.org/

-

Annotating the Air: Penultimate Update

Annotating the Air Documents:

-

Virtual Reality Museum - Paper Draft and Slides

Here is our Draft of our Paper

Here are our Dry Run Slides

Note that these may change a lot until the deadline on Wed, May 6.

-

Wubify.me

##Update

We have the UI basically finished. Parsing the graph and playing sounds also works now. There are some bugs and edge-cases in the code that we need to deal with but our next big goal is increasing the size of the block library and coming up with some cool demos.

-

Virtual Reality Museum Update

In summary, here are our updates:

- Built museum generation (floor plan, rooms, paintings)

- Designed and built most of server logic

- Display pre-fabricated rooms in Unity (and improve painting placement)

- Did research on curation practices and feminist art

For our full writeup, see here:

https://docs.google.com/document/d/1bbb01vIHIxEHEkSiOLgIfarJvd6RVrJncfkaPoKOr34/edit?usp=sharing

For our slides, see here:

https://docs.google.com/presentation/d/1QLM2gJioOXIVYdjxbQJ9lhLzHqQrFOBXd2DYK1o4msY/edit?usp=sharing

-

The Community Speaks for Itself - Write Up 1

Ariana created our first prototype in App Inventor (which can now post text, images, and sound) and explored the SoundCloud API. Sherry led a review of Halsey’s Round: Cambridge app together to decide on what we liked and disliked. The most important take aways were to ask relevant and evocative questions and to always have some media for the user. So, we decided to have 3 large range sounds that always cover our area. To do this, we decided to narrow our focus to just the Berklee campus and Berklee college culture. So, we also explored the Berklee campus together to explore the ethnographics and to think of good questions. Meridian kept notes of our exploration and research (online from Berklee’s website to Soundcloud to FB’s Overheard at Berklee and in-person contacts). Meridian also created some wireframes of the application and will continue to work on questions and starting media for the app.

-

NewsConnect Update 4/22

-

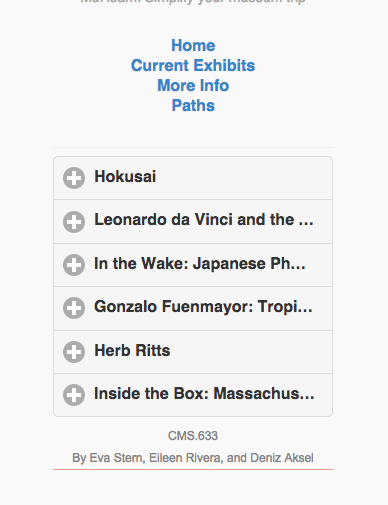

MuRoam Updates

powerpoint link: https://docs.google.com/presentation/d/1lfWwxOdCzc3zUUHZ-A7djCxrPDOFi8XlHHSuyg8EkiA/edit#slide=id.gae980478f_0_0

Thanks to the help of the Artbot team (Desi Gonzalez and Liam Andrew) we were able to come across many resources and readings on location based apps designated specifically for museums.

The first paper by Matthew Tarr from the American Museum of Natural History, was about indoor location tracking technologies and its implications for museums. Tarr made some very important points on the limitation of current indoor positioning systems (IPSs), which cannot be achieved via traditional GPS methods. Many large-scale companies (like Apple and Google) are now working on solving this problem, but as of yet, “there isn’t a standout winner”. We were personally restricted by this technological limitation when our most promising IPS IndoorAtlas failed to properly calibrate in the large rooms of the MFA.

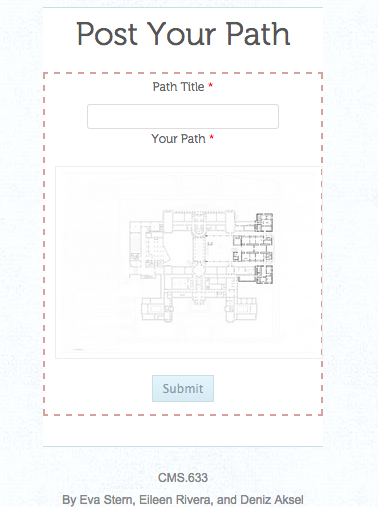

However, other points in Tarr’s paper, along with a project from the Metropolitan Museum was essential in providing us with new direction for MuRoam. The project for the Met, designed by an Intern at the Metropolitan Media Lab, is a “path generator”, that allows users to pick paths through the museum based on illumination, crowdedness and loudness of rooms. However, this automated path generator also comes with a HTML based toolkit that allows users to draw their own paths on any floorplan in the form of lines and nodes, and upload these maps in the form of a JSON file. This tool is extremely beneficial for us, as we hope to use it in our design.

So to keep our approach realistic for the span of this class, we will go about the “draw-your-path-as-you-go” approach for MuRoam. Users will draw their paths as they walk around the museum, double tapping at location where they wish to drop pins. With the pins, we not only hope to encode a pausing point by the visitor, but also other important metadata, such as user orientation (which direction is the person facing), wait duration, and annotations. We realize that this is not the most ideal approach, but given our current restrictions and resources, it is feasible. We want to design our app such that it is flexible for the potential implementation of IPS in the future.

We have had the opportunity to experiment and create beta versions of our technology. We developed a mobile site that holds a lot of the functionality we envisioned our application having.

One of the pages contains the current exhibits with user ratings. Upon reading/visiting the description of the exhibit, the user has the ability to leave a rating, which we believe is a simple yet powerful form of user participation.

Additionally, we have a section for drawing paths. On this page, users have the ability to upload paths they created and view other user created paths.

Implementing our iOS application has proved to be a challenge, at this time we will work on developing polished mockups of our application, but keep the functionality in the website. We now hope to add the augmentations mentioned above (direction encoding by replacing node circles with arrows, encoding of wait durations via node size) to the path drawing page weithin the next couple of weeks. Finally, we will be thinking of ways to make our app a better participatory tool by thinking of ways to make the interface more user-friendly and intuitive.

-

The Community Speaks for Itself

-

vrmfa - the virtual reality museum for all

https://docs.google.com/presentation/d/16oe5IiNOX1D1_kAxPiQXYYzb28FZLFP-4t4bg4GrY0o/edit?usp=sharing

-

MuRoam

Presentation Link: https://docs.google.com/presentation/d/1wT6H9HVkyfU3_-6tdJORLNpIWHQC66FI7-5br9iwhpU/edit?usp=sharing

-

Wubify.me Captain

Problem Statement

Collaborative audio generation.

There is currently no very good way for people to collaborate on audio generation. Currently, one must have advanced knowledge of various complex software options which is not very accessible to your every day internet user. We would like to provide an avenue for people to contribute to and build from a centralized library atomic units of music generation.

Approach

There’s a type of audio creation toolchain called Supercollider that lets you programmatically create music/sounds. You can define types of signals or modulations and then cascade them into new sounds which can also be operated on. We want to make a website front-end for this system. We envision a site which has a “block library” of sounds that each user can use in his/her sound generation. Additionally, multiple users can upload new blocks so that everyone can use them in their own designs. In this way, we will create a collaborative system for people to make new sounds.

Audience

People on the internet who are interested in music generation and/or are bored.

Skills Needed

- Knowledge of Web/GUI technology

- Javascript, Clojure, Object Orientation

- UI/UX

Presentation

-

NewsConnect

(This project may need a better name.)

Problem statement The newspaper has been around for so long that its categories and text-based format have become “the way things are.” But in reality the categories aren’t so intuitive for seeing the larger visual picture of what’s been happening to the world. Some issues, for example, are easily neglected when the next “in” topic comes by. This application is meant to bridge those gaps.

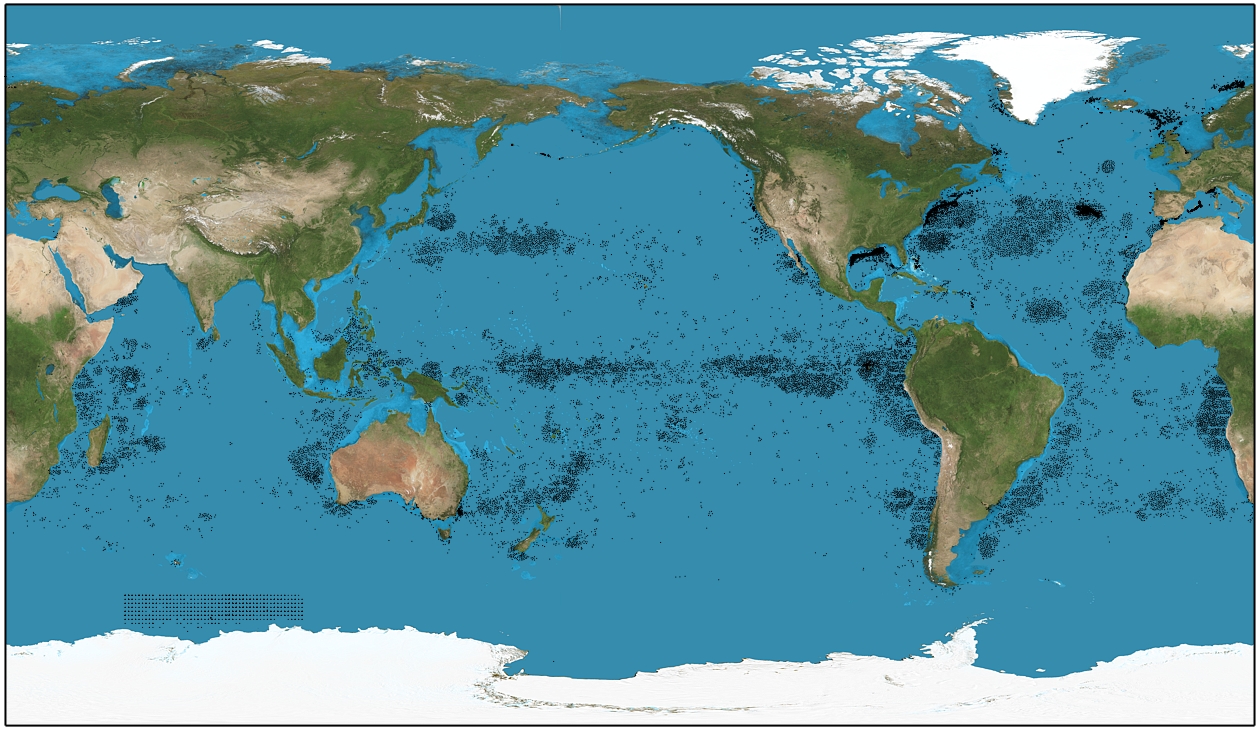

Approach Take years-worth of news data and display it as points on a world map, with heat maps and line connections between countries to show country relations and popular world issues over time. Each news item marker could get its own blurb headline with links to news articles for more information.

Audience People interested in international relations could make use of the country connections and heat map of news over time. People who don’t like reading news as text but want to get up to date on modern world history could use it as a visual tool for learning. People involved in non-profits could use it to identify issues that are still ongoing but passed over in the wake of more popular news, such as in small third-world countries that don’t have much attention.

Skills needed App programming, UI design, familiarity with news sources

http://prezi.com/tcb4w-rhosft/?utm_campaign=share&utm_medium=copy

-

MIT Museum

I visited the MIT Museum. While many people within the MIT community visit this museum at least once over their enrollment in MIT, I was curious to see how other members of the general public acted while in the museum.

I visited the museum around noon on a weekday so the primary atendees appeared to be families with young children. There were a couple older groups around and some adults as well. As the museum is only open from 10am to 5pm, I feel like most weekdays are similar to this one as other demographics will be in work or school.

As would be expected for a museum with MIT in the name, many displays had some sort of tech associated with them. Two stuck out to me in particular. First, on the first floor, there were many interactive videos detailing different teaching styles at MIT. I was intrigued by the presentation style as they covered topics such as “why math is important” and descriptions of the edx program. While these topics are not as flashy as some exhibits that could be shown, one woman was intently watching one of the videos when I visited.

A second interactive technology was the ability to recreate several of the scientific photographs on display. The museum displays several of these images outside and I thought giving attendees the ability to recreate the images on their own added a enticing and interesting way to interact with the exhibits and learn the science behind the pictures.

From what I saw many exhibits encouraged hands on participation (mind et manus), and visitors took advantage of this. The only instance of unintended use that I observed was a station meant for working out problems that a couple of kids were drawing pictures at.

I think a reason for lack of unexpected use is that the museum does not encourage long amounts of reflection, which means that visitors, especially children, are encouraged to move through at a quick place. While the space embodies many of the quiet, solitary attributes discussed in the MFA visit, I believe that to be more due to number of people present, rather than design. I am not sure more technology will help this probably, but perhaps a different design which encouraged longer at each activity.

I think the MIT Museum struggles with visitor due to location. As mentioned above, they use a large image of a bouncing ball on the side of a building, to alert people to the museums presence.

In addition, there is a sign saying they are open outside.

The biggest interaction between the outside and the museum comes from a clear glass window which allows visitors to see into the museum. Additionally there are screens displaying video in this window. Unfortunately I do not think either of these entices visitors to the museum. The screens are hard to see in the daylight when the museum is open and the area seen through the window has relatively few interactive displays compared to the rest of the museum. Once inside, navigation is not initially intuitive, which further complicates museum visits.

-

Geographic and Time Based Hip-Hop Music Evolution

Problem Statement:

Music can be an important indicator and influencer to cultural shift and movements. Being able to analyze the historical development of music across time and space can be a helpful resource in analyzing these trends. Rather then simply reading song names, being able to listen to the music would add an additional level of understanding.

Approach:

In order to display both geographic and chronological trends, I propose a web site that shows the major cities for hip-hop development. A yearly timeline can be stepped through starting in 1970 until 2015. Selecting a city brings up a sampling of the music being created in that location at that time, as well as a description of the style. The music blurbs will be similar to those on: http://techno.org/electronic-music-guide/

Thus audiences can track changes over time as well as changes from place to place, while listening to the movements as they occurr.

Audience:

This website can be used for Hip-Hop aficionados looking to explore hip hop music or music historians looking to develop an understanding of trends across time and space.

Skills needed:

Web-development, Database management

-

Final Project Pitches: Annotating the Air / Urban Libraries 2.0

My pitch is very much up to be changed. I would love to grab the attention and ideas of any students interested in moving beyond rectangles (display screens, computer screen, or piece of paper). Specifically, I am interested in concepts of the third place, augmented spaces, community involvement in urban libraries, and getting DH tools in the hands of communities (I consider myself a techno-constructivist so I want communities to use technology to use technology to learn about and construct their own experiences). With all that in mind, I have two project pitches.

Pitch 1

Project Title: Annotating the Air – Community Histories, Diaries, and Catharsis

Problem Statement: I feel that although there are multitudes of rich sources (typically academic content) for Digital Humanities, communities also have important stories to tell. I want to create a technology that puts DH tools in their hands and allows them to learn about each other, themselves, and the technology.

Approach: I imagine a mobile application rooted in AR ideas. While exploring community spaces, a community member can leave a digital trail of memories, voice recordings, words, photos and videos attached to particular locations for others to stumble upon. It would be like geocaching taken to the next level.

In first iteration, the medium could be a mobile application but I would want further iterations to allow the medium to be more ambient and ubiquitous (a non-disruptive experience that unanchored to a typical screen… that in fact only some people can access).

Audience: I would want all members of the community telling their stories, so the application would need to be usable by people of all ages and technical abilities. Skills needed: For this project, I would love another person to brainstorm and design with but I really need 2 people skilled in mobile native application creation (preferably iOS). I am a programmer as well, but I am unfamiliar with mobile applications so I would need help getting started and knowing what is feasible for our timeline.

The foci would be: making the experience of content pulling and content creation as easy as possible for users, creating a backend where this wide variety of content is geotagged and accessible to users, and learning to use the phone’s geolocation system (would the app constantly run and constantly take the user’s location?).

Pitch 2

Project Title: Urban Library 2.0

Problem Statement: Due to my experiences in DC Public Libraries, I find myself yearning for technological innovations in more library spaces. I am used to having my library be (at least the beginnings of) a hub of tech innovation / a maker space. Libraries must be early adopters of interesting technology. In addition, library resources are continually hidden and undiscoverable.

Approach: I want to create a better library experience with ambient user interfaces (such that it would augment but not disrupt reading and discovering processes). I would like to design a better library experience in which you can find and preview books, interact with other readers, create art with text, and leave a digital footprint.

Audience: As a mobile application, targeting high school college students and older. As ubicomp, all library users

Skills needed: I need another designer to help me brainstorm and design. I also need 2 programmers (they would be free to pitch a medium they are familiar with to host our new library experience).

-

Final Project Pitch - (Never) Lost in Museums

Problem Statement: As someone who is very directionally challenged, whenever I’m in a big museum or even a medium sized museum (MFA in Boston), I have a lot of difficulties knowing where I am. Sometimes I find myself going back to the same section of the museum all the time unable to find the sections I actually want to go to. I think it would very helpful to have a mobile app that maps out different recommended route of the museum. In addition, if the party is separated during the visit, it is often awkward to call each other in a quiet museum. If there is a way to connect friends’ phones, it would be much easier to find each other in a museum.

During my visit, I would love to not have to worry about getting lost and focus on the exhibit itself. For someone like me, it is hard to do so without a good real-time map.

Approach: I envision this being a mobile app with the layout of the museum pre-loaded and can track the location of the visitors by use GPS. Users can also tag their location on a map by scanning a QR-code, bar-code or taking a picture of the nearby exhibit. If the users tag a certain exhibit, they will also be given additional information about the exhibit.

I don’t think we will be able to implement this part in this class but it would be cool to give children some kinda of braclet that has a GPS tracker or a scanner in case they get separated with their parents in the museum.

Audience: The audience would be anyone who wants to stop scrathing their heads trying to understand the paper maps of the museums and anyone who has trouble finding their way around in buildings with complex layout. It will also help people who want to get more information on each specific piece of art or artifact.

Skills needed: Mobile app development skills especially with GPS location tracking, UI/UX design, and maybe data scraping as well.

-

Final Project - Museum a Day

I’m not great at outlining these so I’m just going to follow the sections outlined in the handout:

Problem Statement:

Museums can be difficult to approach, occaisionally pretentious, and often expensive or difficult to get to. Also, many museums feature only works that could be considered “fine art” or otherwise well-known pieces in an accepted, established style. I want to create a museum that is easy to access, open to all types of artistic works, and most importantly, free.

Approach:

The Museum a Day project is a virtual museum, automatically generated or hand-curated in special circumstances, that can either be explored in a browser or through the use of a Google Cardboard headset and a phone. I will cover the automated case, as a hand-curated museum is fairly easy to imagine.

First, we gather art through a web-scraper, not only from established gallery websites that contain images and data on fine art, but also from websites such as DeviantArt, to gain a mixture of classically expected museum art, and strange, unconvential art from creators on the Internet.

Next, using a set of predefined “museum rooms” that the team creates the system will generate a museum and populate the rooms with the selected art. This can be thought of as having a bunch of empty rooms with defined “art spots” and then mixing and matching until a museum is made and filling the rooms by sorting art according to some clustering or semantic matching algorithm. The algorithm to determine what art goes together in what rooms should be thought of as building a virtual curator. It could care about metadata attached to the art (tags, descriptions) or maybe the visuals of the art itself (clustering similarly colored, composed, etc art). That is more a question for the group to answer together, though.

Finally, the newly-created museum will be uploaded to a server, where users with the app can download it and view it in 3D on their phones, or else use a browser to access the museum. In this way, we’ll have created a museum with a very different collection of art that is accessible to anyone with an Internet connection (which, of course, is not everyone).

Audience:

The audience for this project is mainly a younger audience, probably under 30. The reason I say this is that not only does accessing Museum a Day require finding a technologically-accessed museum interesting, the museum itself will be populated by a mixture of classical art and strange art collected on the Internet. I personally feel that appeals to a younger, more technologically-focused group of people who would be interested in seeing art but perhaps not interested in going to a museum.

Skills Needed:

Web Scraping: I am not a web developer, and the methodology for automatically finding and acquiring artwork from the Internet is a task that frankly leaves me very scared. I’m sure it’s not impossible, it’s just outside of my realm of expertise

Designing Museum Rooms: I would like the museum rooms to be hand-created, rather than automatically generated. This way I think they’ll look nicer. I’m not a great designer, and someone with an eye towards architecture/user experience could perhaps have a better idea of how to build the museum rooms so that they fit together nicely.

Building the game/museum generator: This I am good at. I have built many games and particularly am proficient with the Unity3d game engine, which supports Google Cardboard and browser interaction.

Building a server to host the Museum a Day: I don’t know much about servers and websites, so someone (perhaps the web scraping specialist as well?) who enjoys putting together a website that could deliver the Museum a Day would be great.

That’s the Museum a Day. I’d love to work on it, and I hope maybe others are interested as well.

-

de Young Ethnography

I visited the de Young Museum, an art museum in San Francisco. (I was in California during Spring Break.) This museum mostly seemed to have an older audience, though there was one group I saw around my age and some families with children. The outside of the museum is a beautiful park of trees and fountains, and the lobby is spacious. The museum has two floors and a basement where they keep a rotating exhibition you have to pay extra to visit. I didn’t end up visiting the basement.

The exhibits in the museum covered a range of mediums including paintings and sculptures, furniture, textiles, and old pottery and artifacts. They were grouped in collections, often having little description for individual pieces but a little blurb for each collection of items.

The museum didn’t use much technology as a whole. It did offer an audio tour. There was also a total of four displays in the museum. One was an art piece itself, a display split into many panels which transitioned between different photos of nature scenery. (As it was a borrowed piece, I could not take a photo of it.)

The second was in the section on pueblo pottery, showing how the pottery was made. Unfortunately, it was quite close to the loud lobby, so I felt like having a display with sound located right there wasn’t optimal in placement. But it was nice to be able to see the work put into the pottery. I did see some people sitting on the bench right there and watching the video.

The third was also a video on the art pieces being displayed in the room, except it was a large projection onto a wall rather than a small TV, probably since the room was quite dark and the pieces being displayed were also much larger. (They were large pieces of jewelry from Indonesia.) I didn’t have enough interest to stay and watch.

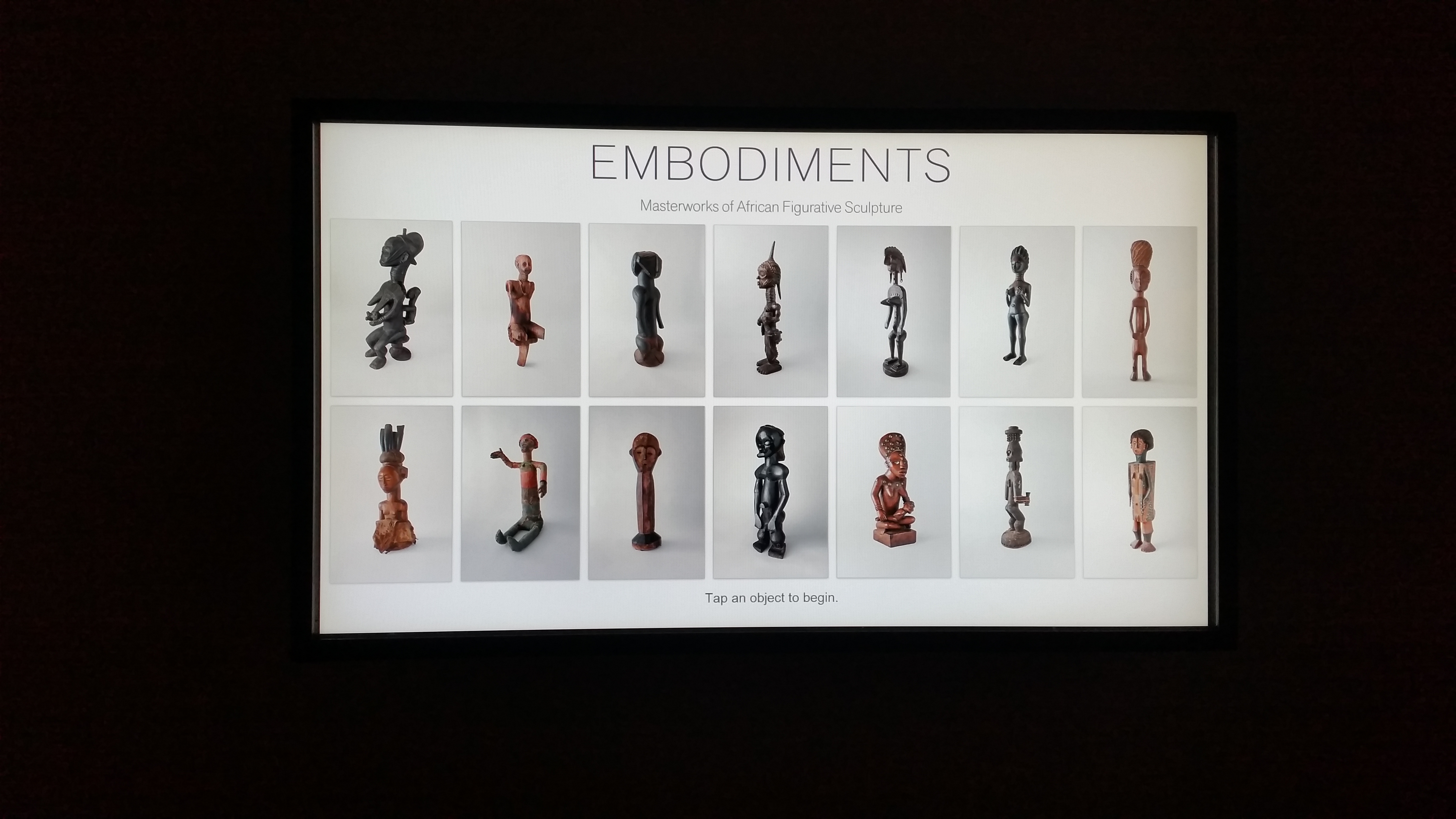

The fourth was a really cool display that was actually interactive, but it was hidden in a small corner behind an exhibit of African figurative sculptures. I almost missed the little alcove, but for my mom pointing it out. In this display you could look up more information about some individual sculptures, such as how scientists figured out what materials made up the sculpture, and sometimes some extra history. I found some of the pieces fascinating (I didn’t imagine that the wood sculpture with the snakeskin pattern was using actual lizard skin), and I wish they could have brought the display more attention. There were only about ten sculptures featured in this display.

Coming to this museum with a different perspective, I realized some things about their use of space. The rooms with paintings were more wide and bright open spaces, incorporating skylights and parts of walls turned into windows. I did like that use of glass window-like walls in some portions of the museum. You could even view a sculpture display outside from in one room, and it was a bright sunny day, which made it work really well.

The rooms with older artifacts and pottery were much darker and smaller rooms, probably a factor of trying to preserve the artifacts, but it also made the space feel a bit more confined, especially near the loud entrance.

I didn’t like that they hid this one interesting exhibit room on a textile collection behind a souvenir shop. I didn’t even recognize it as an exhibit at first. The huge set of drawers contained random samples of textiles from the collection, like a surprise in each drawer. It’s a neat way to display the pieces, but I imagine it would not have worked so well had the room not been empty of other people. There was also a giant (but restricted access) textile library past the room.

One other criticism I have for the museum was that it wasn’t always clear where the artifacts were from in one exhibit upstairs on New Guinea. I missed the large plaque titling the exhibit (I took a slightly different path to enter) and reading the little descriptions of the pieces only mentioned the “northern coast” or “Asmat people” or some river or other unrecognizable and vague terms, and I looked for a while before I finally asked a passing tour guide where the artifacts were from. There wasn’t even any map of the region anywhere. That could be one place it might be useful to see technology—knowing where items are from in a historical exhibit.

Overall, though, it was a fun experience to go through the museum. It wasn’t too loud or crowded that day (other than near the lobby), and the exhibits were fun.

-

The Museum of Science

Corey and I decided to go investigate the museum of science because we’re engineers and we thought it would be more appealing to us than the MFA. Given its name and prime location, we both thought it would be filled with modern interactive exhibits which really leverage the power of technology to convey ideas. We were wrong and the experience was actually quite disappointing. Throughout our entire survey we kept getting the impression that the museum was stuck 20 years in the past.

Almost every exhibit had a large amount of signage and descriptive written material. Additionally, there were audio devices that would read to you exactly what was on the text. On one hand, this is nice for accessibility reasons, though it is a little sad to see that they weren’t doing more with the ‘multimedia’ side of things. The descriptions were of course static text, and the one multimedia element was actually just another rendition of the existing information. There were however a few instances of the museum using the existing technology for things other than just reading the text, such as this exhibit that would allow you to hear various bird calls.

A relic from the 1989 Chernobyl disaster

This is a poorly done AR (augmented reality) tour of a recreated 19th century mansion. This room is real and you stand inside a control room where this image appears on a screen. From there you simply interact with the screen to explore whichever topic interests you. The problem with this is the very clear disconnect between reality and virtual reality. The program running on this computer is essentially a smartphone app and the actual, real room just serves as an enticing background. To make this better, the room needs to be the focus and not the screen. Maybe the museum could acquire some smart glasses and actually produce an AR tour where you can walk up to an object and some small description would appear in your view. Sure, smart glasses may be expensive and new, but this is the Museum of Science right? Show us cool new science.

Although there were clear attempts to encourage user engagement and incorporate interactive elements, almost all of them required museum visitors to initiate the process. They weren’t captivating in the sense that passers-by might stop and explore; there was always a button that you had to press before something interesting occurred.

This raises the question of what can museums and various public institutions do that will “reach out” and grab attention, without being annoying or having barriers to entry. I think that the Museum of Science could improve this aspect of things simply by replacing old technology with more modern and user friendly renditions. For example, the touch screens that they did have were all very unresponsive, which made the theoretically simple task of poking an animal to learn more about it more challenging. Similarly, most of the devices were running software that simply looked old and unfriendly to users that are well versed in the ‘web 2.0’ world.

-

The Virtual Museum of Bad Art

Problem Statement

One drawback to museums that we found in our exercise at the Museum of Fine Arts was the rather elitist nature of many museums. With the advent and subsequent proliferation of personal art and media hosting websites, such as DeviantArt, Flickr, and Vimeo, we can see that there are many artists in the world whose work is not and will probably never be featured in a material museum, whether due to obscurity of the artist or quality of their art. This project hopes to highlight these exclusionary tendencies of museums by creating a dynamic virtual museum of bad art.

Approach

In this project, I hope to create a “Virtual Museum of Bad Art”. By “bad art”, I am referring not only to the quality of the art, but also the value judgements made by traditional museums that relegate this art outside of the museum space. I hope to build a virtual, web-hosted museum that features art scraped daily from personal art and media hosting sites, such as those mentioned above. Some extra features might include human-curated exhibits, machine-curated exhibits (perhaps through a ‘search’ function?), and a mechanism to allow visitors to curate their own exhibit.

Audience

My audience is anyone annoyed by the elitist nature of some art museums, artists themselves, and people who want to curate art exhibits on the web. I less expect this project to be a useful, lasting tool; rather, I see it as an exercise or an art piece in itself.

Skills Needed

Scraping, web programming

Note: I do not currently have a group for this idea; if you have programming skills and are interested in this project, please let me know!

-

Studying Urban Libraries

Inspired by the Urban Libraries Council reading, I visited two of the three Cambridge Public Libraries. The first was the Central Square Branch of the Cambridge Public Library System. The second was the Main Cambridge Public Library.

The demographics of the Central Square Branch are varied widely (intending to serve the entire community). On a Monday afternoon, there were pre-teens hanging out, older homeless people resting and reading, non-native English speaking adults working in the Literacy Program, and college students enjoying the quiet and the Wifi. It was a rather intimate space, warm with brick and wood furniture (so intimate I was uncomfortable taking pictures).

The demographics of the Cambridge Branch were completely different. On the same Monday afternoon, there were nannies watching their children, and college students snacking in the café and using their Mac computers with hipster-looking adults. It was very lively for a library. The space was grand and open, full of light and glass. Some places were roped off with velvet rope and the community room remained mysteriously locked. Although an awesome resource, I found the space intimidating.

The Central Square Branch lends out Nooks preloaded with books (a practice common to all Cambridge libraries). The library also had computers in the Literacy Project space, catalog computers, and a computer lab. I saw all of these technologies used, except for the Nooks.

The main library had a lot more technology. In addition to the common library technologies, large monitors acted as bulletin boards (with information ranging from transit congestion to calendars of library activities). There were also machines for accessible reading and printing (a braille machine and magnifying readers). In addition, there was an amazing upright scanner in the archives. I loved the open access to unusual resources.

However, neither library really capitalized fully on innovative uses of technology to foster social interaction or discoverability of library resources.

-

Okay, I'm Here. Now What?

Problem

Some museums are quite large and have content from many different fields. For example the Museum of Natural History in NYC has displays on animals, physics, and Ancient China. It’s easy to enter a museum and feel excited and overwhelmed, even with a museum map in hand; there is simply so much to see and sift through. Sometimes this leads to you wandering around the ancient history section walking past every pottery display in hopes of seeing cool prehistoric weapons.

Approach

This would be an app that will measure the amount of time you spend at a given exhibit (this will give curators an idea of how popular certain displays are) and provides a list of “other exhibits you might like” that updates when the app recieves new information. So if you are at a dinousaur exhibit but skip all of the chemistry exhibits, the app might suggest an exhibit on monkeys. The app would cross reference time spent with tags/keywords that curators associate with each exhibit. The suggestions could include a map showing current location and path the suggested display and maybe even a “how good was this suggestion?” (out of five stars).

I have no idea how to implement this app, I imagine each exhibit would need some sort of bluetooth signal to communicate with the app since apparently geolocation inside a building is hard and largely not accurate. The app would pick up the signal when the phone is within a certain physical distance and keep track of when it first picked up the signal and when it last picked up the signal. Again, I don’t really have a clue about how this would work and there is probably a better way of accomplishing this.

Audience

This tool would be useful for the average tourist or avid museum goer. It would also be of use to the curators of the museum so they can get a better idea of what types of displays are popular and maybe figure out why others aren’t so popular (maybe they are out of the way, in a typically crowded area, or surrounded by more popular displays).

Skills Needed

- Someone who can make an app.

- Someone artistically inclinded to help with graphics/design/mockups.

-

New England Aquarium

I didn’t actually have a chance to go to any of the museums listed this past week since I was out of town from Mar. 18 to Mar.29. My schedule also doesn’t allow me to go any time this week before the class. I figured the best alternative I have is to do this assignment on a place I’m very familiar with. Although I’ve been to the MFA, Musuem of Science, Harvard Museum of Natural History, and Boston Children’s Museum more than once, I have been to the New England Aquarium 5 times in the past five years. I think I’ve seen almost every corner of the aquarium including the the 4D theater. Therefore, I believe it is fair to complete this assignment without going there again. All the pictures in this assignment are chosen from the pictures I took during previous visits.

##Demographics: The aquarium attracts many families especially family with young kids and K-12 school field trips. Though the aquarium also offers private event hosting - for example, MIT 2015 class council had two events there in the past. The aquarium offers a fun and relaxing experience while providing educational values at the same time. These qualities make it very attractive to parents and educators.

##Technology: The aquarium has small boards that introduce the species next to each tank. Visitors often skim the text and not actually read them because they are often long and not interesting to the kids. The lighting is also very dim so it is hard to read things. Making these boards more visible might encourage people to use it more. I think it would also be helpful if the visitors can download an app for the visit and automatically show the information if the visitor scans a QR-code on a display next to the tank.

One example of an interactive display is the sound examples at the penguin section that allows visitors to press buttons and play the sounds of the different penguins. I personally loved pressing them I was there but I didn’t see very many people using it. Younger kids who might benefit more from this feature often can’t see or understand this. Making it more intuitive to younger kids will make is more useful.

##Unexpected/Unintended Uses: There are very few misuses of technologies at the aquarium since there isn’t anything complicated to use.

##Frustrations: One of the biggest issues I experienced during my visits is the visibility inside the aquarium. It is understandable that the lighting needs to be dim for the health of the animals. However, the dim lighting makes it very hard to locate friends or family members if the party is separated. Technology could definitely help here. If each visitor is given a bracelet with a tracker inside and is able to activate tracking of each desired tracker on their smart phones, it will be much easier for parents to find their children or for friends to find each other during the visit.

Main hallway of the aquarium.

##Interior/Exterior: The exterior of the aquarium is very modern and cool looking which can attract people to visit.

It is also located near the harbor and is generally a very touristy area.

Visitors can see the seals through some glasses before they even enter the aquarium or when they are waiting outside for the rest of the party. This makes the aquarium more welcoming and intriguing before the visit.

Although the aquarium doesn’t have a lot of technology-based features, it does offer fun interactions for the visitors by having a “petting zone” and animal performances. These features allow the visitors to be more engaged during their visits.

The stingray shallow tank is one of the most popular spot at the aquarium which gives the visitors an opportunity to feel the stingrays as they swim by. It is fun and unique for both the adults and the children.

The rotating hallway around the big tank not only conserved space but also allowed the visitors to have a fun and unique experience.

At the top of the big tank, visitors can see the sea turtles and other animals close up without the glass interference.

Overall, the aquarium welcomes its visitors by having a very fun-infused environment that allows visitors to have a deep engagemnt with the environment.

-

MuseRoam – A Social Platform to Design Personal Museum Experiences

Problem Statement:

The museum experience is often something very personal or shared with a small social circle. Especially in the case of open-roaming museums, where there is no designated path to follow, there is often an opportunity for visitors to generate their own narrative by experiencing different works or displays in different orders. Sharing this personal experience with others that have their own unique experience is often enriching for both parties, expanding their perspective on exhibitions. This is why a simple, museum-specific platform to share personal experiences and routes through a museum would be a very powerful digital tool that allows inspiring dialog among much larger groups of people.

Approach:

MuseRoam would act as a combination of a map tool with annotation capabilities. The app would scrape and organize floor maps of various museums from all over the world and across different concentrations (art, science, history, etc.). Using simple drawing mechanics, users can either draw custom paths through the museum, or use position tracking to have their own experience tracked and projected onto the map in an automated manner. At any point on the path, users can add nodes, which they can annotate with tags, text, hyperlinks, and images. Users can then save their paths, share them with either a select group, or make it open-access to the whole user community.

An additional feature can be integration with museum view capabilities of Google Art to allow “digital tours” for people that aren’t able to visit the museums.

Audience:

The audience for this app would be anyone who is visiting, or wants to visit a museum. Additionally, the app would be a great platform for people that have already toured a museum, and would like a different perspective on things. It would be open access for anyone to contribute routes and experiences, however user ratings, or collaboration with institutions can help create “curated experiences” for people with different expectations from the museum.

Skills Needed:

Visual design skills, experience with mapping, IOS or Android programming, (potentially) work with street/museum view Google APIs.

-

MoMA Ethnography

MoMA Ethnography

Over break, I visited the Museum of Modern Art in New York City. The building has a modern white and glass exterior, with a large open lobby where screens display current or upcoming exhibits and events, which then leads to a modern sculpture garden that is open to the public. The audience in the museum varied greatly from very young to very old, though certain age groups gravitated to different exhibits with the museum.

The MoMA is a huge museum, and the audience and technologies changed drastically given the floor and the kind of art displayed in an exhibit. Currently there is an exhibit on Bjork, which I did not have time to visit, but the line to get in was long and fairly young. Even from the exterior, I could tell that the exhibit would be laden with interactive and more interactive and tech-savvy displays, as outside of the exhibit a video of Bjork was projected on an enormous wall that could be seen from almost every floor.

I visited one of the more permanent exhibits, on a floor dedicated to more traditional art forms, namely painting and sculpture. These floors had no interactive screens, but instead had audio tours publicly available on what looked like an iPhone. I noticed that the overwhelming majority of people did not use these audio devices, but the few that did tended to be older. People generally relied on art labels or were simply at the museum to view art and not necessarily learn about it. The galleries were very spacious and open, which allows the viewer to explore freely.

The layout of the gallery was unassuming, and I felt comfortable approaching the art. However, at times, I would have liked more information about pieces that the labels did not provide. I did not want to take an audio tour because I was interacting with friends in the exhibit, and in the moments when I felt confused or unsatisfied with the didactic labels, I thought an additional interface that allowed viewers to further explore information about the piece or artist would have been helpful.

(ex: not exactly sure what the message of this piece is, though I do find it visually appealing)

To juxtapose the permanent exhibit, I also visited a temporary exhibit on Hong Kong architecture, development, and expansion. The exhibits focus on modernity and the future was reflected in the widespread use of technology and interactive displays in the exhibit. The amount of technology used in a particular exhibit seemed to hinge on the period or type of art on display.

Unintended uses of the space by visitors:

One could also see visitors interacting with art in unexpected ways. Perhaps pieces of art can become interactive depending on relative positioning in a room.

-

Ethnographic Evaluation of The Metropolitan Museum of Art in New York

I was fortunate to have the opportunity to spend a few days in New York over break, where I visited The Met.

Upon entering the space, it was evident how broad the audience and museum goers was. There appeared to be a great deal of diversity, while this could be surface level, it would be interesting to have a more detailed breakdown on the socioeconomic and educational background of the museum goers. I appreciated that at The Met, you are not required to pay a set fee, there are suggestions on the price that one should pay, but the individual has the power to chose what they want to pay for an entrance fee, whether than be $1 or $500, I believe this removes a large barrier that potential museum goers may worry about, it is considerate to ranges of economic statuses.

In regards to the technology in the space, I was very underwhelmed. Apart from text and arrangments of materials in varying physical manners, there were hardly any ways to engage with the material in a digital manner. The Met does have a mobil app, it lists the various exhibits, events for the day, and news regarding the museum. It has a very pretty interface, but the information was not transformed into anything valuable for me quick enough.There was an audio tour offered for around $7 and around the museum there were very few people making use of it. I am not a fan of audio tours for several reasons. I believe museum going can be largely social, and such social interactions can faciliate greater depth of understanding and engagement with the art, audio tours do not allow for social interactions, but rather restrict it completely. Additionally, with audio tours, I find that the user is prompted too quickly on what to think on particular works of art, rather than having an opportunity to explore and engage with the art. I would of have liked to see digital displays and interactive text fields. Throughout the museum the descriptions of the works were very vanilla and traditional, just text written on plaque on a wall next to the piece. Even the displays were dull and not visually appealing. This was very frustrating to me.

While I was looking at the various period rooms in the museum I envisioned a more exciting outlook on the space. The concept of preservation and museumification of spaces is odd to me. I like to think of the original functionality of a space and the individuals who interacted with the space on an everyday basis. Having furniture in a room, while it conveys interior architecture and design history to me, the purpose of the room isn’t always clear. I dreamt of having moving holograms of the people who once resided, worked, and interacted in the spaces being displayed. The holograms would be digital representations and extensions of the space. While some might find it distracting, I would greatly enjoy experimenting with interactive elements.Below is an image of one of the period rooms in The Met. It is stunning visually, but the effect is not longlasting and the majority of museum goers do not interact with the space at a deep level.

On another note, I was taken back in the best way possible by the stunning interior and exterior architecture of the museum building. It is large, open, and clean, as a result very welcoming. The clean space made me feel very comfortable and safe. For the amount of people at the museum I did not feel compressed, I was thankful for the open space and high ceilings, I believe it is an incredibly important feature of the museum. Additionally, the works are not cramped, they are nicely space out, as can be seen from the images, this helps give indivdiuls some privacy and sense of being while observing the works. Individuals should never feel pressured to move quickly through a museum, and I believe large crowds can have such an effect and can create great discomfort. While the interior and exterior are largely different, they both convey the grandness and importance of the building. The exterior has a large set of stairs that in conjuction with its locations draws attention to pedestraians and those transiting through the space.

While I didn’t have an opportunity to visit all of the exhibits in the museum, I felt as if I saw a large amount of the museum. Below are photos of my favorite works and gallerys from the museum, I hope from these images it is clear how there is no medium provided in the galleries to interact with the works apart from text. This is a large contrast to other museums I have visited, where museum goers can interact with the works, such as MoMA.

This museums houses a wide range of works. While the majority are not modern, I believe one should still have the opportunity to engage in a digital manner with the works, whether this digital engagement be limited to the app, offered through touch screen interactions and games in the galleries, it is missing something. There could even simply be QR codes located in the descriptions of select works, providing an opportunity to engage with the art deeper.

-

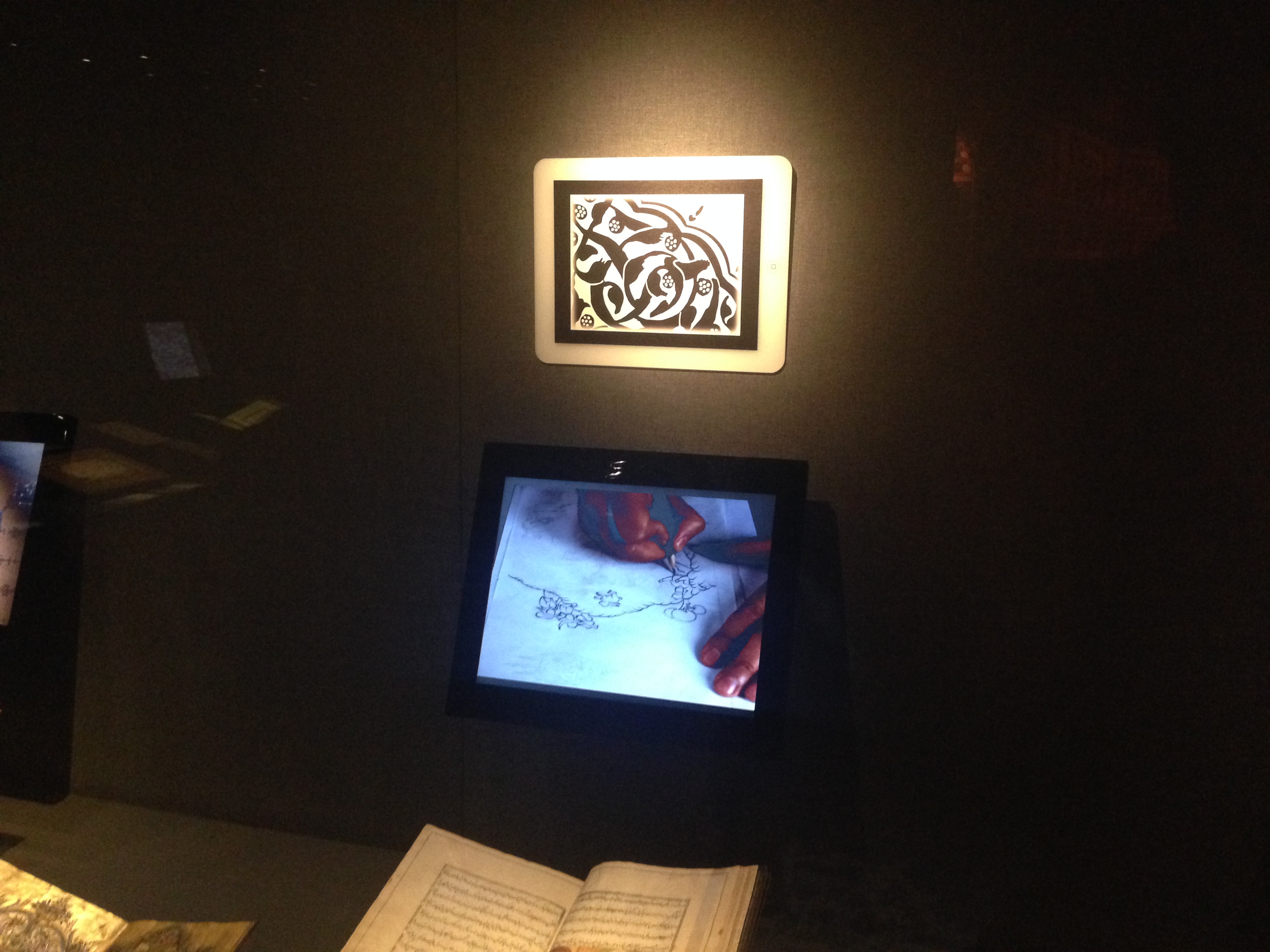

An Ethnography of the Sakip Sabanci Museum

Introduction & Context

Having spent spring break in Istanbul, I visited the Sakip Sabanci Museum- a privately owned villa-turned-fine-arts-museum (a la Getty Villa). The SSM is well known in Turkey for hosting retrospective exhibitions on world-renowned artists like Salvador Dali, Rodin and Joseph Beuys. However, the permanent collection of the SSM is the home for a large collection of Islamic calligraphy art. This ethnography focuses on this collection and its display. I had the privilege to tour the collection with one of the curators, who talked me through the museums target demographic, and how they wish to expand it by incorporating different ways of information access. The museum is experimenting with various new methods – some I consider to be more effective than others.

Demographic

The Islamic calligraphy collection is somewhat of a niche interest area, and is not directly accessible for a broad audience. Additionally most of the art is in the form of old books (Quran’s etc.) that need to be well preserved and can only have one page open at any given time behind a glass display. The SSM came up with various interesting methods to overcome these challenges, and make the presented knowledge as accessible as possible. Additionally, there was a focus on making the museum interesting for children and modern art enthusiasts by introducing interactive technologies and parallels to contemporary works.

The Mobile App – A Great Way to Exhibit Books

The SSM developed a great iPad app that aims to augment the museum experience with digital expansion of the physically presented information. Acknowledging the novelty of these mobile technologies, the museum provides you with the option of renting an iPad with the app to use throughout your tour experience. This can be seen as a modern take on the outdated audio-tour option. Throughout the exhibit there are markers (similar to the one below) indicating you to point your camera while the app is running:

The app recognizes the unique pattern of this marker, and redirects the user to various interactive interfaces (e.g. photo libraries, text, scans). An example application can be seen at the lobby of the museum, which used to be a living room. A pattern here takes the user to a photo library of the late owner of the villa, Sakip Sabanci, hosting various celebrities and historical figures in this living space:

But I believe the greatest impact this technology has is in its use for exhibiting books. Scanning patterns near exhibited calligraphy books redirects the user to complete scans of all the books behind the glass display. The user can pick from many books, skim through the pages, and experience the dense text as a whole rather than being limited to the open page behind the glass. This is also an amazing resource for research purposes, which is why the museum has their whole scanned collection available online.

Image of a digital book

Image of a digital bookAn Interactive Table for Kids

The museum also experimented with an interactive table and large display. This installation was primarily targeting younger visitors. The table contains many “eggs” that a user can manipulate and “throw” onto the large display showing an image of the Bosphorus. The eggs then crack, and the contents ( e.g. a calligraphic boat, or bird) move around on the Bosphorus before they disappear. Although the execution was impressive, I personally found this display somewhat gimmicky, as it had no goal of conveying any form of information to the kids. Rather it seemed like its purpose was to just entertain kids that might otherwise be bored of the exhibit to this point:

A Modern Take on Calligraphy by Kutlug Ataman

The calligraphy exhibition ends with a contemporary digital artwork that reinterprets the notion of making images out of text. In this work, the artist Kutlug Ataman overlays two videos of the Bosphorus water taken from the same location at two different times of day, typing the traditional call for prayer in Arabic with one video over the other. This “dynamic” calligraphy is connected with early traces of expressionist works from Ottoman art history. The incorporation of this somewhat out-of-place artwork acts as a great gateway for contemporary art enthusiasts to engage with work that is otherwise outside of their areas of interest.

-

Boston Museum of Science - Ethnography

NOTE: I notice that Karleigh also went to the Museum of Science and discussed some topics (ex. demographic, types of technology) in her post. So, in order to avoid repeating information, I will discuss the interior/exterior of the museum, an unexpected behavior, one possible frustration with the museum, and provide more examples of technology.

Interior/Exterior

Although the exterior of the building and the lobby (ticketing area + information booth) are quite typical, the museum distinguishes itself with a large T-Rex outside and a Rube Goldberg Machine inside.

A T-Rex is outside. (source: http://www.afterhood.com/wp-content/uploads/2011/07/Science-Museum-6-11-7-01.jpg)

Rube Goldberg machine in lobby.

Unexpected Behavior

Interestingly, attendees tended to flock to displays which were mechanical, rather than purely digital. The novelty of the mechanical displays were the main attraction.

This screen in the food exhibit was abandoned for a long time.

I had to queue for several minutes before photographing the popular magnetic canvases.

Possible Frustration

While the museum captivates attendees and encourages interaction (ex. footrace between children), many of the attendees, adults included, did not pay attention to the informational content.

The audio tour was rarely used, and the sheer number of displays caused attendees to avoid reading informational boards, and instead move on to play with the next exhibit.

Children ran along this in this sensor-filled racetrack, but few read the information.

Many exhibits have audio tours, but I only saw two attendees use them.

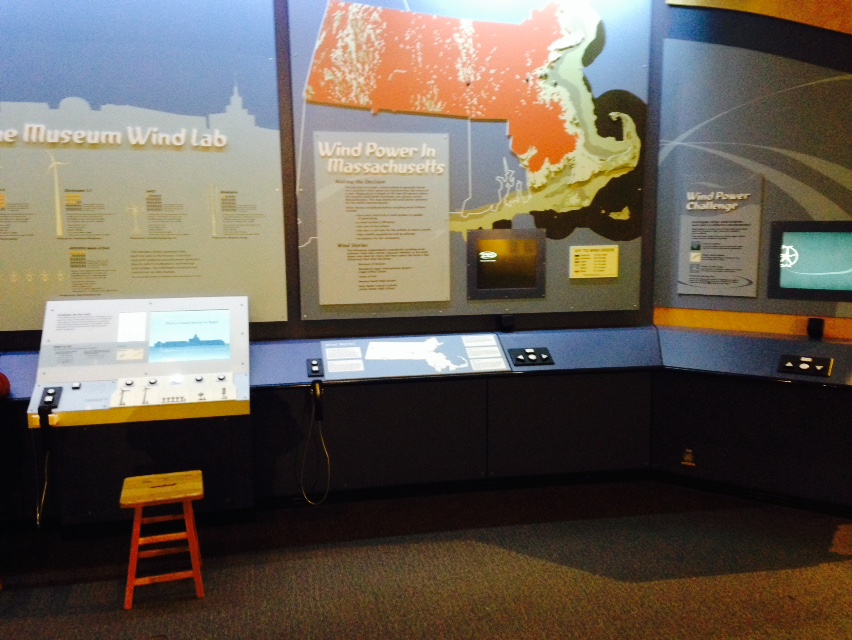

In the wind exhibit, childen would play with the interactive display and run off before listening/reading the science.

Conclusion

The interior/exterior of the museum are typical, with a few distinguishing features. The exhibit halls themselves were highly unique and well decorated. The mechanical displays were very popular, but they were so widespread that attendees rarely read the science.

More Technology

###Mechanical

Artifical duststorm.

Tubes containing scents.

###Digital

Robotic bee.

Multi-screen aquarium.

Many displays used a computer and a few buttons.

-

[MuGo]- Simplifying Your Museum Visit

Project Title: [MuGo] Museum Trip Planner;”Easy, Breezy, Art”-MuGo

Problem: Often times you go out of your way to visit a museum, one may not be familiar with the area, amenities, and services offered by the museum. In fact, people may chose not to go to a museum because they feel like they will encounter inconveniences such as transportation, weather, and food issues. Another issue, is that people often feel that the information displayed in a museum is removed from one’s common knowledge and they may feel overwhelmed going to a museum. While there are applications that can provide such information, we aim to provide such information in a compiled, helpful manner.

Approach: We would like to streamline and concentrate the information in an interface that is easy to navigate and user friendly. One would be able to chose a museum and the date in which they hope to visit the museum. In response, the interface will display the weather for the day, tour times, museum events, transportation routes and travel time, and ratings of local restaurants and coffee shops near the museum. This way museum visits become less daunting and more feasible to the general public.

Audience: Our app targets a broad audience. We imagine our persona anywhere from young adult onwards. We hope that socioeconomic status will not be a limiting factor, however, our persona is most likely a more educated group;those who want to frequent museums, but don’t have time to plan out trips. Additionally, this app would prove useful to tourists and new residents of a given area.

Skills Needed: Front end developer, Minor Back End Developer, UI/UX designer, content development

-

Site Visit to the Museum of Science

The Boston Museum of Science