Commentary

-

Assignment 22

Reading commentary for Mike Featherstone’s 2006 overview of the history and the nature of the “Archive”

In the reading, Mike Featherstone talks about the history and nature of the archive as a cultural institution. The archive could be traced back to ancient civilizations, where people used it to preserve and organize historical documents and records.

The article also explores the development of the modern archive, which emerged in the 19th and 20th centuries as a way of organizing and preserving cultural artifacts and documents. Mike argues that the archive not only played a key role in shaping our understanding of the past and our collective memory but also was used to exert power and control over history.

The concept of “archive fever” is also interesting, which refers to the desire to collect and preserve cultural artifacts and documents to preserve the past. There are deeper psychological and cultural motivations behind the desire. The author argues that this desire was driven by a sense of loss and a need to hold onto the past but also highlights the archive’s potential dangers, such as distortion and manipulation of history.

Reading commentary for Wolfgang Ernst’s “Dynamic Media Memories” in chapter 4 “Archives in Transition”.

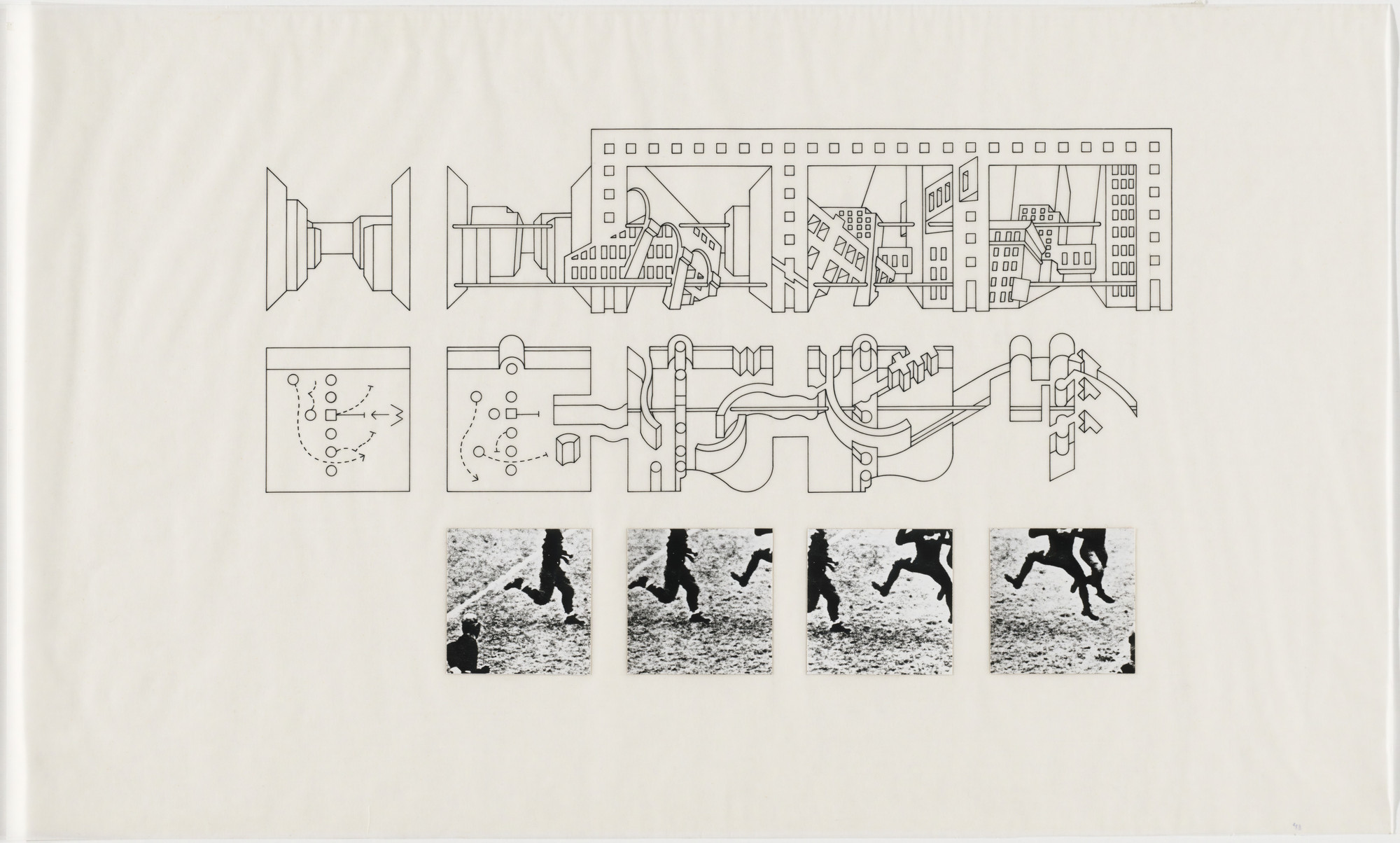

This reading discusses the changing nature of the archive in the digital age. The author argues that the traditional concept of the archive as a static and fixed collection of documents and artifacts is no longer relevant, as the proliferation of digital media has led to a more dynamic and fluid understanding of memory.

Ernst points out that the digital archive is not simply a collection of digital documents but is also shaped by the technologies and processes that are used to create, access, and preserve these documents. He discusses software and algorithms’ role in shaping how we access and interpret digital information.

The author also touches on the concept of differential archives, which are self-learning and adaptable to their respective media formats, and the role of cognition in the creation of memory. Ernst also points out the importance of media-specific archival practices and the need to abandon the storage metaphor in favor of a model of memory as a network.

Reading commentary for chapter 3 “Underway to the Dual System” by Wolfgang Ernst.

The author discusses the concept of a digital archive and how it differs from classical archives. Traditional archives are based on storing physical items, such as documents or artifacts, and rely on classification systems to organize and access them. In contrast, digital archives are generated on demand and are accessed online through networked computers. Using agents and filters allows users to create and shape the content of the digital archive, making it a generative and participative form of archival reading. The author also discusses the challenges of archiving processual works, such as born-digital media art, and the need for art and archival language to be developed for these types of assignments. The digital archive operates at a micro-temporal level and relies on the archiving of source codes, rather than fixed data blocks, to regenerate new copies of algorithmic objects. The author also explores the mathematical basis of the digital archive and the potential for it to be used as a tool for memory production.

-

Assignment 22

Featherstone’s Archive Paper The bounds for what is considered an archive are widely expanding with the addition of internet storage mediums. It is to the degree that I didn’t even know that archives was a government defined entity, as Featherstone described it, as I thought it was just a means of organized storage. The transition of archives from physical to digital can be an active parallel to the transition of archives after smaller nations ceded archives to larger nations due to war. The same arguments, such as the rights to determine what remains in the archive as well as who has control over determining that, can be applied to the internet from physical archive debate. I did not know that archives were intrinsically political entities, originally utilized to categorize populational data. But it is no wonder as to why the debate on what information remains in an archive and what gets removed stemmed from a transition over to what type of information is contained within the archive. In terms of population data, one has no reason to debate whether to remove someone’s information from the registry in an archive.

Wolfgang Ernst Dynamic Media Memories The concept of an archive has drastically changed from a fixed storage entity to a constantly changing entity, due to the hardware used to write and store the information. This is an incredible insight, especially if someone is not familiar with the flaws of electronics, as devices change and break down over time. But with the logic he uses, although the paper is more durable than a single storage unit, it also breaks down over time, so does that mean the concept of archives has always been a constantly changed entity? Or is the concept of time by the user in play when someone identifies an object as fixed/stationary, and another defines it as changing? Archives become just a transfer of data through the advent of the internet? I don’t know how much I can agree with this notion. Although the speed at which one grabs data from storage is incredibly decreased, the process of searching for the data still exists nonetheless.

-

Assignment 20

Kirschenbaum, “So the Colors Cover the Wires” The interface is framed as the most crucial part when designing a product that requires end users to view with the commentary. This insight is especially pervasive as he is targeting regular digital humanities researchers attempting to abide by specific deadlines, targeting us specifically, to which they focus on data collection rather than the end product for the user. I remember coming across apps that I deemed unusable, because the interface was too old or unattractive, but I do not think I made the connection as to work it would take to customize an appealing interface. The commentary also displays irony, as most of the interfaces the writer deems as great interfaces, I would not show the same appreciation. This means that there is also a time aspect to developing interfaces, as the more advanced the technological age is, the more the need to be constantly upgraded within the design to appeal to the user persists.

-

Assignment 18

Hearst: What is Text Mining? Hearst makes a distinction between true text mining and current text mining, in which true text mining is able to discern unknown patterns and interpret context within articles, while current text mining is very similar to data mining, only garnering known facts about the article (frequency of words etc).

Contrast between Text Mining over 20 year span Text mining defined with Hearst: Untangling Text Data Mining was still frequency and pair based mining, in which algorithms only looked for specific words within the data and presented the information back to the user. Although this is conducted in texts, the level of sophistication that these algorithms employ was on the same level as data mining. There was little to no use within the humanities field, as it was still largely fact based. Looking at Binder’s article current day, the field of text mining has made incredible strides, as the algorithms are able to connect sentiments towards specific fields of text with a respectable accuracy. This new version of text mining now has the potential to be used for humanities analysis, and is also a step closer to interpreting the context of specific text without human intervention. It is interesting looking at the progress of the field over the course of decades, but I am still a little skeptical about whether text mining would be able to interpret context on the level of a person.

-

Reading responses to Featherstone & Ernst (Data Archives)

Reading responses to final course readings:

Featherstone’s overview on the nature of the archive

- Archives are for storage, but they are also for access.

- This text connects to our Memes of Resistance project, stating clearly that archives are also necessary for the documentation, and remembrance, of uneven power struggles and surveillance activities. But those who control the archives control the remembrance. That archives, as they were originally conceived, were to be kept secret is worth noting. Archives were also conceived of as playing a role in regulation and oversight of populations: “From the perspective of the emerging European nation-states as they became drawn together and then locked into a globalized power struggle, the construction of archives can be seen as furthering governmentality and the regulation of internal and colonial populations, as well as providing foreign policy information about the strategies and globalizing ambitions of rivals.” More nefariously, this led to an individuation process, whereby “people’s characteristics were observed, recorded and stored in the files,” which is perhaps most common today in oppressive regimes. However, this is exactly what’s happening over the Internet–many of our behaviors, including and perhaps especially our purchase behaviors, are known more by companies than by ourselves. The issue of classifying and selecting data is, in today’s case, extreme, as is the issue of data ownership, which did not originally exist in the case of many historical archives.

- I particularly enjoy the insight of “‘archive reason [as] a form of reason that is devoted to the detail’ (Osborne, 1999: 58). Yet it is clear that the archivist’s gaze depends upon an aesthetics of perception, a discriminating gaze, through which an event can be isolated out of the mass of detail and accorded significance.”

- Now I have to read “Funes, his memory.” This story sounds fantastic.

- I agree with Foucault’s formulation of the archive as archaeology, viewing it as a humanized accounting system in which discourses are created from given data.

Ernst’s “Dynamic Media Memories” | Chapter 4 in “Archives in Transition”

- It’s telling that German public broadcast services view archives as “production archives,” compelling us to recycle archived information and keep it in use.

- Digital archives are co-produced by users for their own purposes.

- The ability to memorize is now automatic for digital systems, but recall still requires resources.

- “The shift from emphatic cultural memory (which is oriented toward eternity) to intermediary media” implies a sort of “Eye of Sauron”–a shift in focus as cultural memory dissolves and intermediary interests take root. Perhaps time, today, seems to move faster because our memory is simply shorter, as we offload memory tasks to computational agents that curate information to us in ways that humans never would in a technology desert.

Ernst’s “Underway to the Dual System”

- This section expands upon observations of the last, integrating our understandings of generative data and memory manipulation through recall.

- Specificity of search and recall is paramount in the digital archive: “The digital archive has no intrinsic macrotemporal index, as the “year 2000” problem made clear. It operates at a microtemporal level instead.” Likewise, their existence emerges through recall functions: “Algorithmic objects are objects that always come into being anew and processually; they do not exist as fixed data blocks.”

- References becoming “self-aware” is a unique and curious concept. Hyperlinks allow us to embed and access data that we would never be able to embed and access in the “real world,” such that they take on new meaning and engagement capabilities. Paired with the fact that algorithms allow us to construct new data through faceted affordances, this paradigm makes for a novel way of creating, in a sense, our own custom views of reality. This is both worthwhile and dangerous, in the case of archives, since this customization can do away with important nuance, improperly focus on details that are context-dependent, and inauthentically allow individuals with archive control permissions to dictate the scope of public data access and, as a result, public knowledge and discourse channels.

-

Featherstone 2006 Joshua Mbogo

A New Post

Its was interseting to see how the way that digital and traditional archives were talked about. I think what stood out to me the most was the how digital archives have become more like data and more data has been recorded because the type of archive is decentralized. This decentralization is the opposite of the traditional archive which existed as a map or correlation of data that was put together by the librarian / archivist. The reason for a map is because users can’t just look up an archive they must have an understanding of how things are connected. This map allows for an understanding of the data but it also makes traditional archives more static than digital which can be mapped in many different ways because it can be filtered and represented in many different ways.

Enter text in Markdown. Use the toolbar above, or click the ? button for formatting help.

-

Reading Assignment 22 - Qingyu Cai

Mike Featherstone’s 2006 overview of the history and the nature of the “Archive” reminds me of the feature of data accessibility. There are inequalities in both data and archives, strongly affected by power and right. The questions of who makes the history and the extent to which there should be wider access to those who seek to search out counter-histories remind us of the correctness and origin of archives. Less powerful nations are also less potent in maintaining their own archives. For instance, nowadays, we can see that in some countries’ museums, some archives and preservations originated from other countries. How and why are they here instead of in their mother countries? Furthermore, some archives can also be destroyed for various reasons, such as wars, natural disasters, artificial damages, etc. The archive itself can not only be regarded as a form of documentation but also a process of history. It is critical to look into what is documented as the archive’s content, while it is another interesting story to view the change of the archive itself as the research object. The second takeaway from this week’s readings is about archiving media art. How to archive media art exists a series of questions worth discussing. The feature of media art determines the answer to the question of how to archive media art. Current solutions include objects, pictures, and artifacts, while electronic communication is based on time. The archive forms of media art cannot reflect in-time communication and resist archiving. Besides, some media arts are instantaneous art, and do they need archiving? So, the main questions for archiving media art include the archiving forms and the necessity of doing so.

-

Assignment 22- Sally Chen

1)“Archive” The article discussed the origins of Archive and how it has changed as digitalization has evolved. As mentioned in some of the earlier readings in the semester, there is a lot of discussions about how to archive website data or dynamic media data. Who has the right to organize the archive? Who will have access to read or edit these archive? What kind of media should be archived? Because the owners or the organizers of the archives have consciously or unconsciously included their bias to the archiving process, the history or reality reflected or represented by the archives will always be partial and subjective. Therefore, we should emphasize the diversity of archive creators. With different archives coming from people who look at the society from different perspectives, we are more likely to have a “whole” representation of reality.

2)“Dynamic Media Memories” Compared to traditional static media, the advantage of dynamic media is that it is more flexible for extraction and editing. One limitation of dynamic media is how people decide whether the data flowing through media memories should get an “end of flow” to storage and even archive. For example, in TV media, formal TV programs such as news are usually recorded for storage, while most of the TV time is for advertisements. Should it be recorded through video recording data like news reports even though they are actually repeating themselves? Or should they be stored through counting numbers? What would be lost during the media transformation?

3) “Underway to the Dual System” The author illustrated the differences between traditional archives and digital media archives in many ways, such as the separation of storage and presenting in digital archives, the essential components of archived media (language letters or 0/1), and so on. Digital archives are based on software for presentation, which links data in hardware (0/1) and media stimulus for human perceptions(image, sound, text, etc.). Therefore, digital archives are generally unstable for individual users because they do not own the hardware that store the archives and the software that access the archive data and transform the mathematic components to media forms, but only have access to the presented media formats. It will lead to some challenges for the users in terms of archive transfer or advanced data processing. And for digital archives, their authority is challenged because the people who control the software and hardware essentially have power to decide what data can be archived and how it can be archived (what algorithms are used to process the 0/1 to produce the desired media data).

-

Assignment 22 Comment_Elva Si

This week’s readings broaden my understanding of what “archives” mean. In the traditional sense, archives can help to discover historical records and truths that have been hidden or lost. Based upon what are archived and how they are stored, future generations may come up with a completely different interpretation of the past compared with the reality or they may be limited to search out counter-histories.

Since archives have weaker classifications and greater amounts of material that is boxed or shelved under chronological or general headings, they provide more space and depth for interpretations. From a humanistic perspective, we can raise the question of who makes the history and what is missing from the history based on the available archives. We can also find connections among various archives speading across the global, including but not limited to keywords, images, linkages between space and time etc. Possibly, we can find priceless records and icons that have a high degree of contingency.

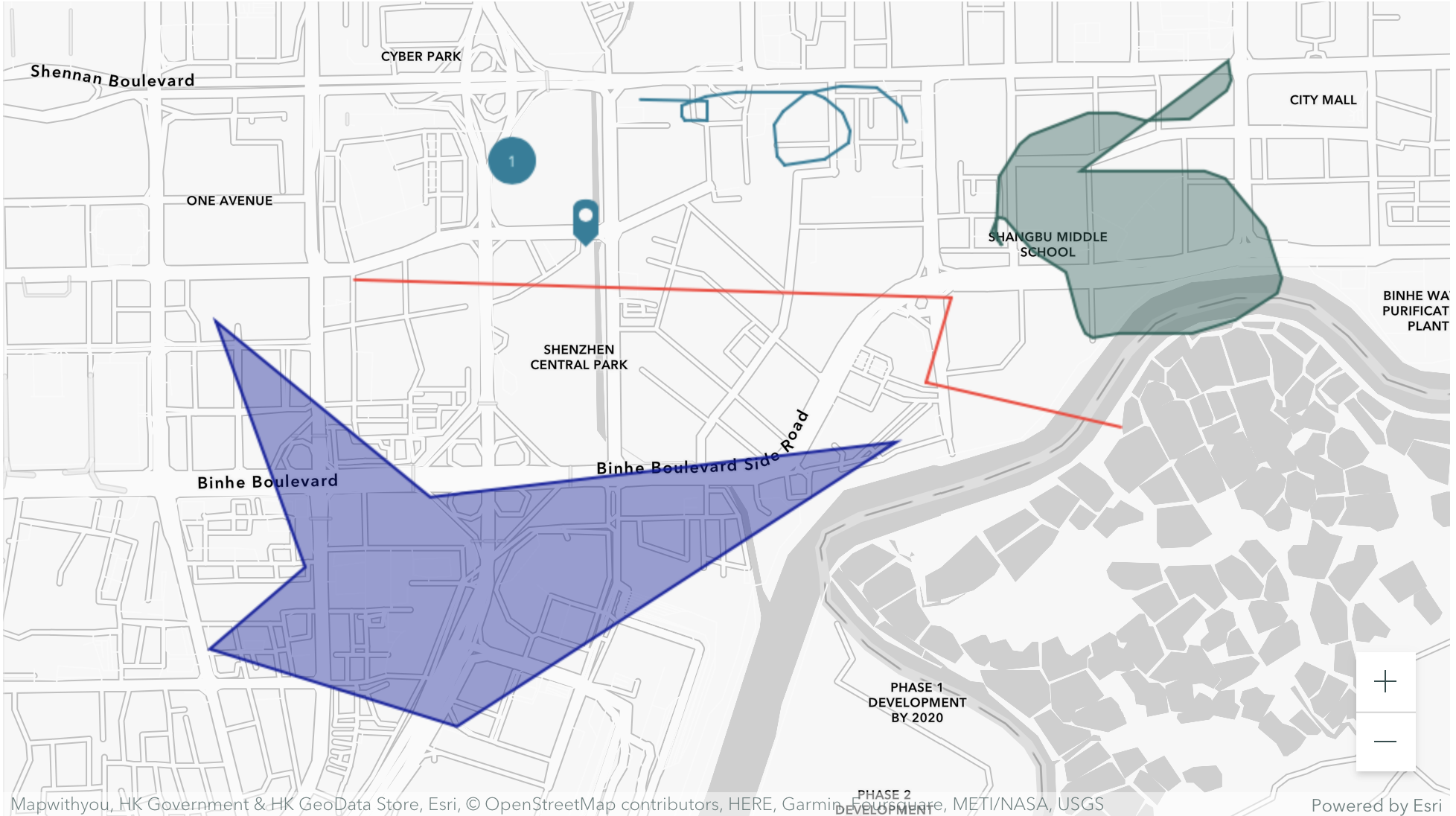

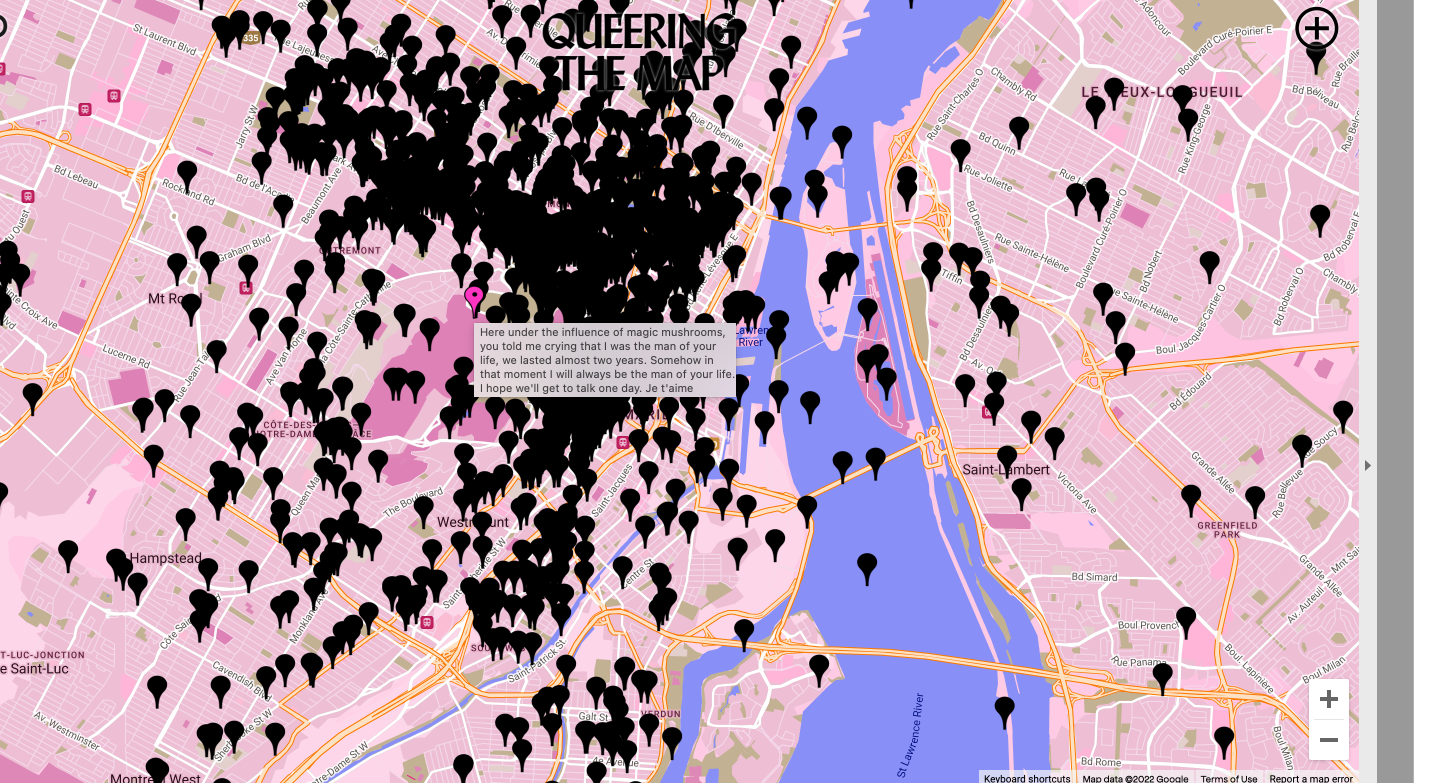

Archives could also serve a function that I did not realize before. They could be places for creating and re-working memory. For our final project, we are thinking about including closed LGBTQ+ places and events in the map. For example, Shanghai Pride Parade, LGBTQ Film Festival, Eddy’s Bar (Gay Bar), Lucca 390 (Gay Bar). These places and events are shut down due to government regulations or COVID influences. The archive in this map can be a powerful tool to support future generations memorizing the places’ and events’ existence and/or re-create something similar in the near future.

-

Assignment 22 Comments

Archive

In Featherstone’s article, he touches on many different points. One of the main points that I took away is that historically, archives have been the basis of forming a nation, that with these archives, one can build an ‘imagined community.’ However, this raises the question of how much should we archive, which correlates with who can view these records.

The archive is not just a collection of the past, but it is through this collection that the user can discover more about themselves by identifying with history.

Archives In Transtion

Today, with the immediate transfer of data, any present moment instantly becomes a memory. This really changes the way we view the archive. Instead of the archive following a static, chronological order, it’s information can be taken in and out, allowing us to shift back and forth in time.

-

Assginment 21_Xiaofan

The research proposed an innovative approach – a generative system to rethinking the data visualization process. It argues that the visualization of cultural archives is objective and biased in its traditional research process, as the researcher might prioritize certain viewpoints based on the socio-cultural conditions at that time. The generative approach would bridge the gaps in the archive process; it would also democratize the ‘sole decision-making power of the archives in the formation of cultural heritage.’

The sets of matrixes the author developed are enlightening in that it offers new perspectives when users choose different parameter combinations to explore a topic. The layering of information allows users to investigate further and understand the relevance of a multitude of information that doesn’t necessarily share the same metric unit.

This research paper also provides insights and inspiration for our project, the Dutch art website. We could also develop the idea of layering to create an interface that allows for the combination of a variety of information, with the visual representation of these contents through different space configurations, homogenous ones, and variable-dependent ones.

-

Takeaways from Max Frischknecht: Participatory Image Archives - Qingyu Cai

The idea of generative design serves as a tool for designers to create designs for interfaces based on use case scenarios. The matrix created can help to serve as a design framework, allowing the analysis and synthesis of functions, features, and categories. Besides, the scenarios created can serve as interface design guides, letting designers know what users seek and can explore using this interface. The generative design idea and the matrix lay a solid foundation for the interface design of dynamic visualization, which gives us a hint and guidance for our final project. We can also create a matrix to analyze what flows we will make and for whom we design. Currently, we have three main views of the homepage and several sub-categories of a single work display. It is the potential to create a matrix for different personas to test the completeness of our design in terms of both functions and details.

-

Max Frischknecht’s writing on Digital Archives

The greatest take-away from this piece is that “the visualization of cultural collections… shouldn’t aim at reproducing and consolidating the hegemonic claims of archives, but rather invite the viewer to critically question what is being viewed and the circumstances of its creation.” In our project, where we evaluate memes of resistance deployed during the Russian Invasion of Ukraine, it’s important for us, as well as our audiences, to consider the position of these memes within culture, and to question them accordingly. Who created them? What were their intentions? Were the memes targeting someone or something, and what kind of effect were they trying to have on audiences (who, like ours, will be viewing them in similarly social environments)?

As Frischknecht argues, our data visualizations may be considered generative in some respects (they are also participatory, as designed), but they are significantly limited. We supply certain conditions (e.g., data) and ask a program to generate an illustration of those data for us. Then, we might place new restrictions on it (e.g., altered color schemes) to seek new ways of visualizing the information, but the system carries these tasks out based on its own design functionalities.

The author’s examples of visualization types based on intended emphasis (of space, as a map, and time, as a timeline) are particularly relevant to our work. The latter visualization may be helpful, for us, in showing how meme content changes over time across different subreddits when selecting for particular content facets (e.g., references to violence, references to politicians). We could also show concentration of meme facets at a single time point using a map.

-

Generative Design - Joshua Mbogo

A New Post

I think it is important to think about the importance of what you are trying to get out of the data and what you are actually trying to visualize. The article makes a distinction between visualizations that are trying to show data versus a process and how that might change how the data is represented and interpreted. This article continues to emphasize context and how it not only grounds a digital humanities project but idetinifies and controls the project to a significant degree.

Enter text in Markdown. Use the toolbar above, or click the ? button for formatting help.

-

Comments on Max Frischknecht (University of Bern) Thesis

The framework of Max Frischkent’s research on generative interfaces is enlightening in regards to questioning the objectivity of certain kinds of data, specifically archival data, which represents pieces of information that were selectively catered and chosen from a wider dataset. Just like what was previously discussed within prior works for DIgital Humanities, data is inherently objective structures that are selected subjectively to maintain an argument, and when presented in visualizations, add an extra layer of subjectivity as the data is framed in a particular way. To create an interface that allows the user to understand and interact with the process in analyzing data, provides an additional layer to the archives for individuals with no prior knowledge of the subject matter to actively participate with and analyze the data. By doing so, Max’s research contributes to a characteristic that is needed in most fields, even outside of DIgital Humanities, a need to lower the barrier of entry to understanding the origins of relevant data.

-

Assignment 21_Elva Si

The visualization of cultural collections, shouldn’t aim at reproducing and consolidating the hegemonic claims of archives, but rather invite the viewer to critically question what is being viewed and the circumstances of its creation.

Frischknecht brought up an unique and essential approach to consider archives in a humanistic setting. As data is capta, digital archives should be capta, of which could be recording of natural phenomena and/or subjective perspectives. I really like the idea of asking users to participate into archiving cultural heritage collections since it could reduce the “sole decision-making power of what should be archived. Inviting and incorporating people’s views and practices, especially those from cultural minority groups could benefit the collection of what would be passed to future generations. The matrix generator is effective and straightforward for users to identify the goals, aspects, concepts, and granularity for archives. I think we could create something similar in our interactive map to guide users contribute to the location/event information, as well as archiving past/closed places to record their memories or views.

-

Assignment 21- reading- Sally Chen

I think Generative Design can help generate the visualization that the user wants - by choosing different variants to limit the type of presented data and the way it is presented for the purpose of illustrating a problem or supporting a point of view, etc. This design framework is more effective when data types are abundant and “complete” as users can obtain outcomes in all combinations of variants. In addition to being able to quickly and easily acquire visualizations, I think another advantage of using the generative design is that it can facilitate users to explore previously unnoticed visualization models that may be valuable. For example, the Task classification in matrix 1 may facilitate designers to consider more tasks that users can do with the interface. Overall, as the demand for visualization of digital archives rises in academia and the mass, it is significantly meaningful to develop such a generative design. On the one hand, it can help scholars to explore and visualize archive data initially without long coding time. On the other hand, this design can be applied in exhibitions or museums which are facing the general public to present historical perspectives. Since our project is a map-based visualization, I think the design matrixes in the article can help us to figure out the way we want to present our data and what kinds of content should be primary and which ones are secondary during our brainstorming phase. The brainstorming process based on the design matrixes needs to consider how to integrate the different kinds of visualization into one interface in an effective and simple way while taking into account the user experience.

-

Assignment 21

Commentary on Max’s research

From what I gathered after reading Max’s research summary, through generative design we can continue to input a different number of design approaches, each with different values or requirements, until we find the most effective solution. In Max’s case, he hopes to find the best way to visualize data surrounding cultural characteristics through his matrices which give him different possibilites of visualization, and through visualizing this data, he can answer or create tools that help to answer questions of the past.

Relevance for our project

For our project, we are aiming to show how design of certain objects has played out over time. Our visualization type is our timeline, but there may be different visualization variants that more effectively answer questions on our topic.

-

So the Colors Cover the Wires - Joshua Mbogo

A New Post

I think a very interesting thing that is discussed is the meaning of interface. Many times people associate the word with a spatial construct but this writing touches how this doesn’t have to be the case. It highlights how often we work with interfaces in our daily lives with the example of doorknobs and house surfaces in general. In a broader sense this piece enlightens the reader of how everything in our reality is an interface from our own eyes to our ability to feel and interact with haptic devices. The goal of discussing these types of things is to improve and expand on the types of interfacese we use when building devices and experiences for users. Currently in the modern world we have become very familiar with Graphical User Interfaces (GUI) and very recently haptic interfaces but as we increasingly peer into the future and the discover the technologies that lye their we also begin to peer into new and exciting interfaces to interact with the modern world.

Enter text in Markdown. Use the toolbar above, or click the ? button for formatting help.

-

So the Colors Cover the Wires Commentary

comment on the aspects that have changed your understanding of interfaces, especially the GUI, and what kind of impact they might have on your final project.

I really liked what was discussed in the section titled “The Artist of the Beautiful.” I feel that naturally I’m more drawn to a more beautiful interface, but I also feel like I’ve alway appreciated art and design a lot, so I thought that this made sense. However, I learned that having a beautiful interface is so important for a software because its technology is so complex. Because we want to separate ourselves from the computer and what’s actually going on behind the scenes, having a beautiful interface is so important to do so.

-

So the Colors Cover the Wire - Assignment 20

If there is a lesson here for the digital humanities it is simply this: just as interface cannot – finally – be decoupled from functionality, neither can aesthetics be decoupled from interface.

I find this quote intriguing, as the two go hand in hand when it comes to user interface. However, which is more important? The functionality or the aesthetic part? I think one can argue that if one isn’t drawn to the interface aesthetically itself, then it’s not a good interface. Even though it’s functional, it might be harder for the user to navigate through it if it’s not organized neatly or doesn’t look good, making the app really “unbearable” to use. So at what point do you sacrifice functionality for aesthetics, or vice versa? Either way, it feels like you can’t have a good application user interface if you don’t have both.

User interface is especially important since our project is based on conveying informationthrough a timeline, so having an accesible and aesthetically pleasing interface is crucial. But like the above principle, we meed to maintain the functionality of our project. We are going to try to draft up ideas to find out the best way to convey information while making the app enjoyable to use.

-

Kirschenbaum's "So the Colors Cover the Wires"

There is a massive array of interface types, and we interact with many of them every day. The touchpad on a laptop, a remote control, a touch screen on our phones, are all interfaces, allowing us to interact with something “under the hood” that we could not interact with directly. Ultimately, however, the interface is an intermediary. It gets between us and the data we’re hoping to affect. In any given situation, there may be a dozen or more “layers” of what one could call an interface.

As illustrated in examples here (e.g., Nelson’s “the last machine with moving parts”), interfaces determine what can be done in the data world (as I’m calling it–the world in which the data sits and can be manipulated by users through interaction with an interface).

I haven’t before thought about the idea of “beauty,” specifically, in interface design. Elegance, simplicity, and clarity are what most come to mind when I consider the necessities of interface architecture. But beauty, and Gelernter’s “deep beauty,” seem particularly useful in distinguishing functional interface forms.

I also haven’t been exposed to the concept of a “contact surface,” but have done work previously examining book pages, and other physical textual surfaces, as interfaces. It’s true that these interfaces possess essential information experience capacities, not least of all because of the availability of additional tools (e.g., pens, pencils, paperclips) that allow us to augment the physical interface for our unique needs and, in some cases, in ways that go beyond the capacity of most digital tools for text analysis and annotation, in large part due to the limitations of digital interfaces for textual interaction.

Regarding our final project: there are several useful insights from this work. First, we can consider alternative interface schemes; we’ve been considering, primarily, a desktop interface, but what about a screen interface within a museum setting? What about an augmented reality interface that allows us to visualize memes in physical space as we see something different on a monitor, or in another physical space? Second, we can consider streamlined design elements (more “beautiful” designs) to enable cleaner user interactions (for now, our interface is a bit clunky, and burdened with too many features for too small a space, leaving it overly complex and burdensome for inexperienced users). Third, and considering archival interfaces: there are many other options for the presentation of “bulk” data, such as memes of resistance, that we can explore, including more search-based mechanisms and those that focus on a single display element “at a time”, rather than–as we are currently doing–flooding a single interface with too many different types of data.

-

Assignment 20

“So the Colors Cover the Wires”: Interface, Aesthetics, and Usability by Matthew G. Kirschenbaum

In the article, Kirschenbaum commented on how ‘the visual (and aural, or tactile and olfactory) elements on the page or screen function as integral aspects of the information experience rather than as afterthoughts to some “pre-existing bundle of functionality”.’ Kirschenbaum further points out that the debate on interface transparency mirrors the debate in literary studies over the nature of a book’s ‘contact surface’ and the role of its physical and material features in the production of the meaning of a text.

Aesthetic

The success of Apple is hinged heavily on its product design. While most software and websites have pragmatic or functional ends, Apple’s OS X interface encourages users to cultivate an aesthetic sensibility even in the most mundane corners of the desktop. The clean and minimalist interface only keeps what is necessary and reduces the complexity, making it distinct from other competing products.

Some questions to consider: How do we define an interface to be aesthetic? What kind of aesthetic are we pursuing in interface design? What role should aesthetics have in interface design? Should beauty in interface design play a complementary role in enhancing functionality? Or should it be the drive for developers to rethink the content and create new experiences for users to interact with it? How do we balance the competing demands of function and beauty?’ How will the design balance efficiency, ease of use, and user agency?

Usability

In the last section ‘Blake Archive for Humans’, the author also talked about the competing demands of humans and machines and how it is hard to strike a balance in interface design to meet various users’ needs. This also applies to our final project where our Dutch Art website design also has different interface design for various needs: a mindmap interface for users who want to explore more deeply the relationship between artworks; a gallery interface for users who just want to browse without specific aim; as well as a map-based interface for users who want to check nearby exhibits and locations for certain artworks.

Will the users who wish to engage more deeply be permitted to trace the wires to their source? Or will the interface enforce a clean line between who’s in and out, makers and users, producers and consumers? How will the concept of the user be inscribed or circumscribed in DH’s emergent design? How could we test if an interface is usable? To what extent should users to included in designing and building DH? How participatory and reflexive will that process be?

-

Comment on So the Colors Cover the Wires_Elva Si

The “secret” of interface design is to “make it go away.” – Nicholas Negroponte

Interface design always intrigues me because it could convey the functions and purposes of a product to general users in the most natural, effective yet inconspicuous way. I like how Matt discussed the misconceptions around GUI, which indicated many real-life stereotypes regarding UIUX design. For example, the more colorful, conspicuous, or complicated the GUI is, the less trustworthy it could be in contemporary academic settings. Matt also suggested that although GUI is often not given enough time and effort during the product development stage, it should be an integral part of design. Matt further raised questions like “what role should aesthetics have in interface design?”

Through this article, I learned about how a GUI should

- actively engage users to explore different functions rather than hiding certain tools from the interface.

- give users freedom to pull up and down a “curtain” to find all technical tools (previous/next, comparisons, enlargements, indexes, etc.) Users could arrange, label tools neatly according to their own preferences.

- ensure the main interface clean and simple.

This article provides many key characteristics to consider for our project. When we design the interface for the LGBTQ+ interactive map, we need to balance the ample information and the convinence and efficiency for navigation. We also want to ensure the aesthetic values of this map to encourage constant usage. What are some tools/functions we would love to provide to the users? What should be hidden behind the curtain while others are on the stages?

-

Assignment 20- interface reading- Sally Chen

“Bruce Tognazzini, who has had a distinguished career at Apple, celebrates a visible (as opposed to a merely graphical) interface: “A visible interface is a complete environment in which users can work comfortably, always aware of where they are, where they are going, and what objects are available to them along the way” (Tognazzini 1992: xiii). “

This paragraph is a good overview of some characteristics that a good interface should have, and one of the key points is showing what is available. For our queer map project, the main purpose of our interface is to allow users to quickly acquire up-to-date and detailed LGBTQ-related information. Therefore, we need to find the balance between offering as much detailed information as possible and creating the easiest pathway to a certain outcome (in my mind, it means providing the minimum necessary information for decision-making). For now, the minimum information we provide in the first interface includes the event/location type and location information. Since the final design has not yet been determined, if we were adding a listing feature into the interface, what kinds of basic information would help the user decide whether to click into the events/locations for more information? It needs to be further decided based on design experience and user insight.

-

Reading Assignment: The .textual Condition - Qingyu Cai

The born-digital data and texts are different in scale and complexity, posing enormous challenges for digital archivists and digital humanities researchers. As mentioned in the reading, accessibility to born-digital collections, for instance, is necessary for digital humanities researchers. Since the born-digital data is changing fast every day and even every second, it is essential for them to timely get access to the dataset. In the final project, we have two questions while trying to find collections of Dutch art in the US. The first is access to online digital collections, and the second is the continuously changing dataset of Dutch art collections in the US when new pieces are found, or new donors emerge. The digital dataset, compared to the non-digital one, should incorporate the technology to accustom the features of timely change. Besides, digital archivists and digital humanists both need hands-on retro-tech know-how. Utilizing the tools and technologies learned from the mini-projects in our final project is helpful. And the tools we have learned and used contain various aspects and functions, including web scraping, interactive mapping, data visualization, etc. Without a broader knowledge of tools and hands-on experiences, it is hard for digital humanists to apply theoretical methods into practices and realize the project. However, the emergence of new-generation technologies also brings a variety of complexities and challenges to archival frameworks. Since these technologies are also emerging, some might have limitations and bugs. So, it is also critical to gain the attention of technologists to help improve the stability and features of tools. All in all, we still need to have confidence that new technologies also bring new opportunities for research and experimentation, not just questions and problems.

-

Assignment 20-"So the Colors Cover the Wires"

“So the Colors Cover the Wires”: Interface, Aesthetics, and Usability by Matthew G. Kirschenbaum

This is a classic work that combines the senses of sight, sound and touch. The appearance of the CD Player and the exhaust fan are fused, and you pull the string that hangs down, and the music plays. This straightforward approach establishes a relationship between the designer and the product, and between the product and the user. This is Naoto Fukasawa’s well-known design concept “Without Thought”, which is to design according to the naturally existing user needs. The core of unconscious design is to translate people’s unconscious behavior and intuition into physical products that are visible to the naked eye, and to satisfy needs in the most natural and direct way, as if everything were undesigned. Returning to the origin of things and connecting the design behind the product with the positive use of the product is an extremely high design perspective and dimension. On a mobile app, every UI detail of the visual designer will serve to guide the user. The shading and color contrast of the buttons will tell the user where you want him to click. The typography and font size display of information and text will tell the user what you want him to focus on. This all happens naturally, without the user having to deliberately guess and trial and error, and automatically connects the visual language to the behavior when the interface is presented to them.

-

NLP Tools & Experiments

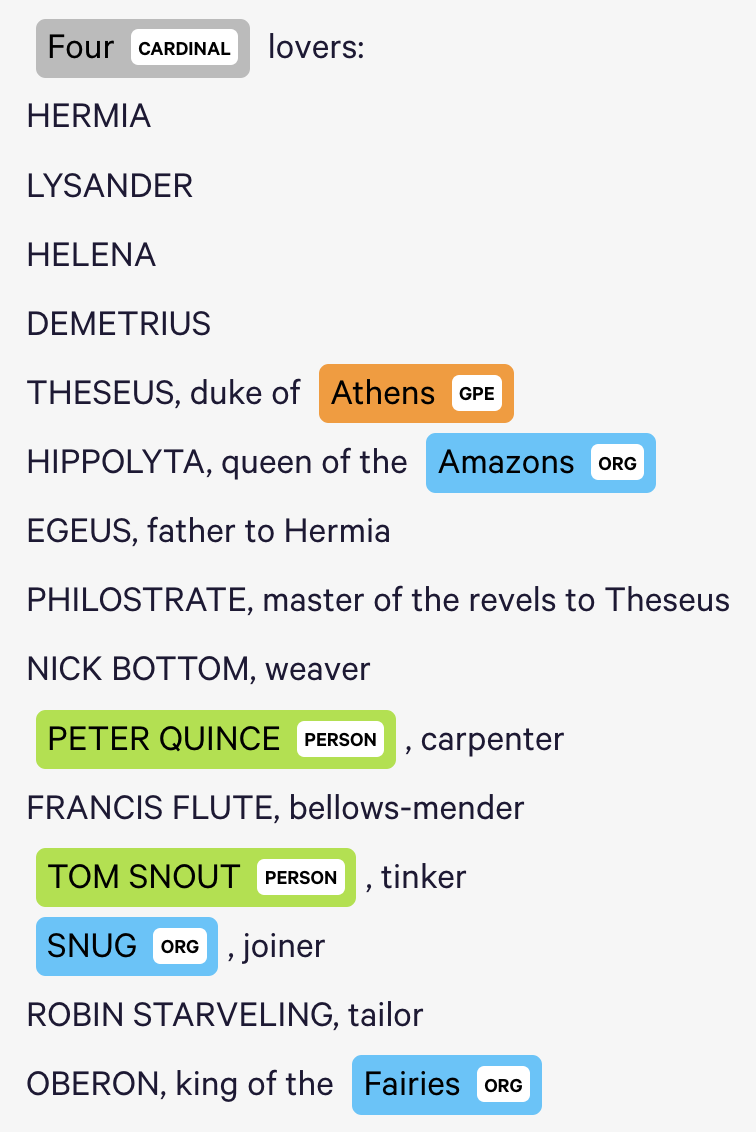

The Named Entity Visualizer provides helpful summaries of term classifications, but its classifications are often incorrect, and it does not appear that we can train its classifiers ourselves within this interface (perhaps we can do so within the larger tool). For example, it uses contemporary term associations to identify entity affilliations, and therefore believes that the textual reference to “Amazons” in this transcript of “A Midsummer Night’s Dream” refers to the organization rather than to a demographic group (see below). The tool also only allows for one entity affiliation for each word, although some words may naturally fall within multiple categories.

JSTOR Analyzer allows us to import text documents and identify topics and people based on prioritized terms whose weights we can adjust (from 1 to 5). Users can also add their own terms to identify the topics and people associated with them. In my case, I used a .txt file of A Midsummer Night’s Dream. JSTOR also provided literature (e.g., essays by Harold Bloom) related to the document I provided.

JSTOR topic graph was not available.

Voyant visualized frequent terms into a word cloud (I assume it has a list of stopwords, because words like “a” and “the” did not appear in the word cloud). Voyant provides a Reader tool that allows users to select individual terms and see the

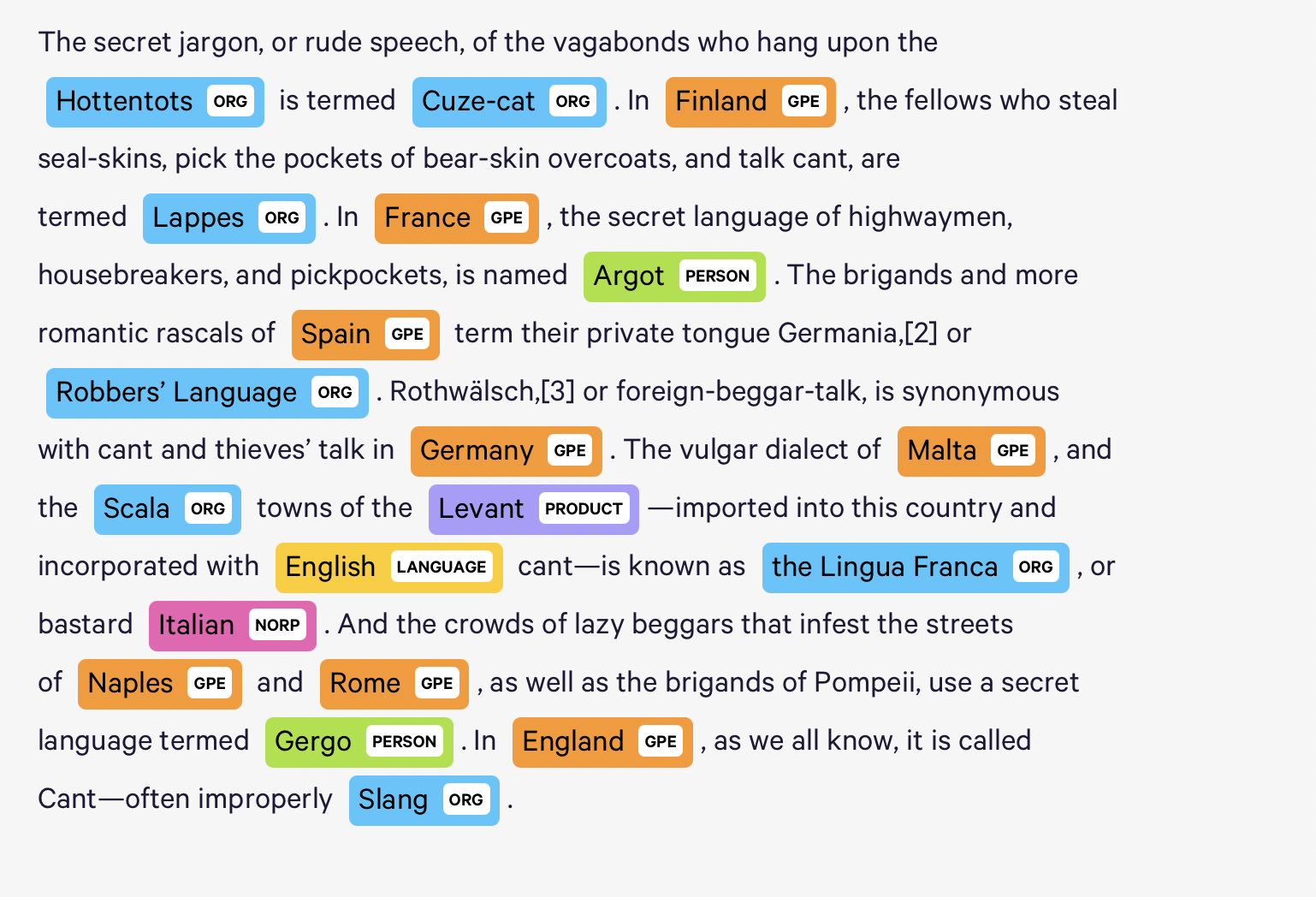

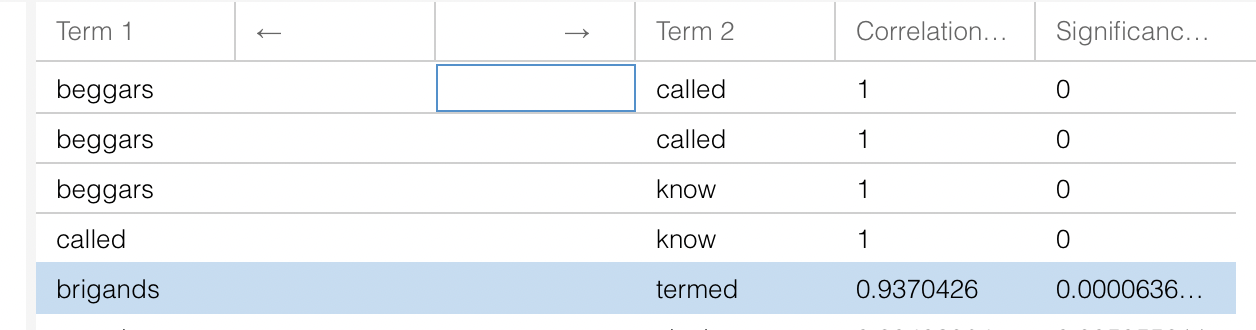

m in a concordance (otherwise, the concordance defaults to the most significant term in the corpus). Voyant returns descriptive counts (number of documents, words, unique word forms, vocabulary density, WPS, readability index, etc.). The readability index is particularly interesting to me. The word cloud can be modified to become a terms list, and links between words can be visualized (very interesting!). The TermsBerry tool allows single words to be visualized alongside words that co-occur with them (I’m not sure that the required distance between the words is for them to be considered co-occurring). URLs can be extracted to show particular views of concordances (as well as other data). Word correlations can also be shown in a bubble line or as a correlations chart that measures the significance of the correlation. The trends tool shows relative frequencies over time. Importantly, you can select a node in the chart and the Reader tool will show you that term at that place in the transcript (so, if a term frequency is increasing, you can then look at that frequency in context). Document terms can also be visualized in list-form.

m in a concordance (otherwise, the concordance defaults to the most significant term in the corpus). Voyant returns descriptive counts (number of documents, words, unique word forms, vocabulary density, WPS, readability index, etc.). The readability index is particularly interesting to me. The word cloud can be modified to become a terms list, and links between words can be visualized (very interesting!). The TermsBerry tool allows single words to be visualized alongside words that co-occur with them (I’m not sure that the required distance between the words is for them to be considered co-occurring). URLs can be extracted to show particular views of concordances (as well as other data). Word correlations can also be shown in a bubble line or as a correlations chart that measures the significance of the correlation. The trends tool shows relative frequencies over time. Importantly, you can select a node in the chart and the Reader tool will show you that term at that place in the transcript (so, if a term frequency is increasing, you can then look at that frequency in context). Document terms can also be visualized in list-form.Summary All of these tools have strengths (I especially like the Voyant tool). But limitations are also present.

-

Experiments With Text Mining

displaCy Named Entity Visualizer

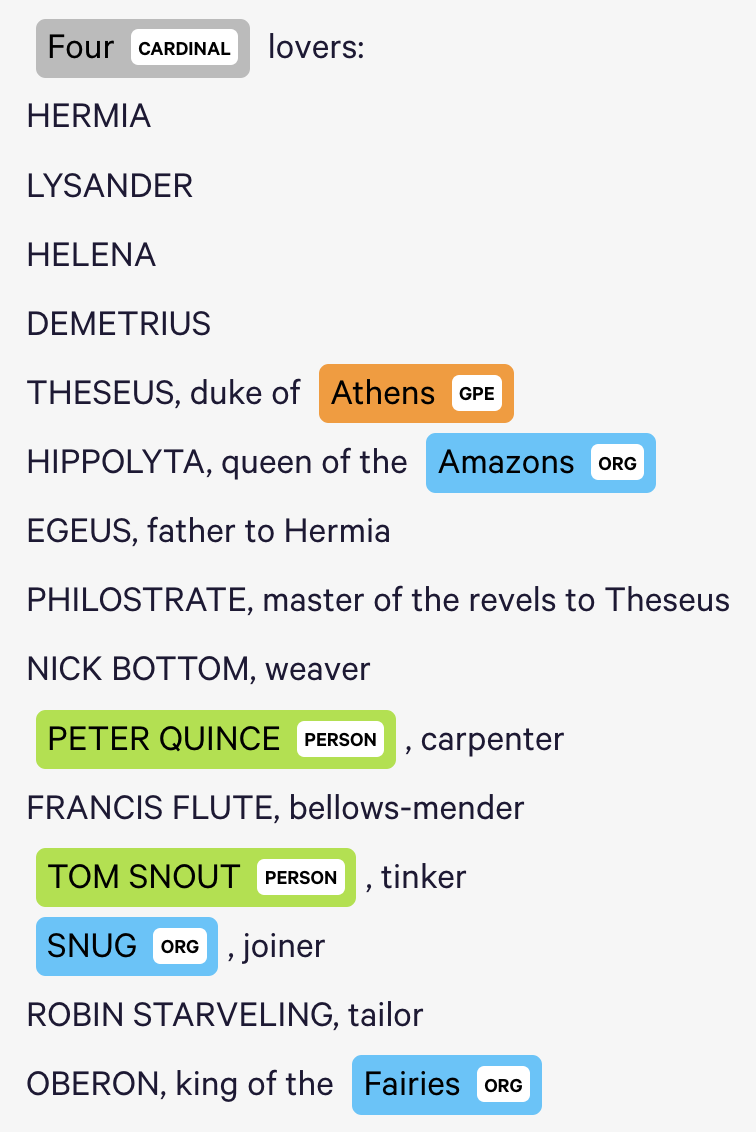

Using the displaCy Named Entity Visualizer, I selected an excerpt from The Slang Dictionary, by John Camden Hotten. I noticed that the tool was able to accurately identify words under categories such as location and geopolitical entity, but seemed to classify a lot of terms under organization, many of which were incorrect. It seemed to make classifications based on the context of the situation or the way the word was presented, even though many words with very different associations are presented in the same way.

JSTOR Text Analyzer

Using the same text as above, this tool gave me reccomendations for other readings based on terms that I’m assuming the tool thinks are most prevalent. Based off of what I had taken from the text, I think the tool did a really nice job at coming up with 5 terms that summarize its main ideas. I was also able to change the weight of each term, I guess if I thought that one or more terms expressed really important ideas in the text.

Voyant

The Voyant tool found which words were the most common in the text and displayed them in the form of a word cloud. This could be great for seeing commonalities between different texts. There was also a section titled correlations. This showed terms that were in some way connected to each other. I’m not sure if this was based on definition or if the tool was able to recognize that when one term was used, it shortly after made reference to the other term, but regardless shows how certain pieces of information can be linked to another

-

Mar1 Hearst: “What is Text Mining?” - joshua mbogo

A New Post

After reading the articles I think it is interesting to look at how Hearst explains the problem of text mining and overall natural langauge processing along with the limitations of the technology of the time. Keeping in mind that the first article read was published in 2003 the models they had could only process text for small phrases and had a very hard time comprehending full though forms in the text. I think this is a great reminder of the complexity of language and the human mind that comprehends and creates language. Additionally, it is interesting to note that unlike many forms of computer analysis that tries to find trends text mining is trying to find new information rather then parse for trends in exisiting data. The creation of new information also known as comprehension and understanding is a very human idea so to see it in computers is a very promising but frightening realization that scientists are continuing to develop. Text mining in this right was the forefront of computer inteligence and has continued to help scientist understand the artificial mind as we use it to understand our own. Enter text in Markdown. Use the toolbar above, or click the ? button for formatting help.

-

Comment on Text Mining / Natural Language Processing_Elva Si

I still remembered the very first class when we talked about identifying the same word being used across different editions of Shakespeare’s work over decades. Now I know such activity as the text mining approach. It could help humanists link extracted textual information from different written resources to form new facts or hypotheses. I think this is a powerful approach since it allows researchers, scholars, experts, and more to look beyond single resources, enable a comprehensive view, and discover new patterns. I like the example Hearst (2003) used regarding using text to form hypotheses about diseases. While doctors can only read a small set of literature in their particular field, it would be beneficial if text mining, this conputational technique to process texts over a much larger pool of literature, could be used to search across different medical fields and help generate new, potentially plausible medical hypothesis, alongside the doctors’ medical expertise. I can imagine the scope of impact if such approach could be widely utilized in the medical industry. I also believe in such approaches to produce large impact in more fields, including but not limited to academia, news report, history. Yet, , the text mining approach will also face several challenges. Out of all challenges, “information one need is often not recorded in textual form” is what I considered the most prominent one. In an increasingly digitalized world, important information will be documented beyond texts; in videos, podcasts, and spoken conversations. How could computitional techniques access and interpret these non-textual formats? How could technologies help people examine both textual and non-textual information altogether?

-

Comment on Raw Data Is an Oxymoron_Elva Si

Raw data, as Gitelman suggested, has “understandable appeal.” When people collect a large number of data, they feel empowered to compile, store, manipulate, transmit, and INTERPRET data to their own understanding. The raw data is straightforward, comprehensive to some extent, transparent and self-evident. It is difficult to ignore the beauty of raw data yet remember the interpretive base of the raw data . This article reminds me of the data collection process we are currently doing for the LGBTQ interactive map. For the first glance, it would be pretty impressive to observe the 170 survey responses being collected over just one week. Yet, we still need to remind ourselves constantly that our interpretation of the raw data could be subjective.

At the same time, I also enjoy reading Gitelman’s perspective on 1. the concept of “raw data” could be limited for different disciplines, especially the literacy and humanistic fields. 2. the concept of “raw data” fails to address the non-English world where data could be understood and interpreted differently.

-

Assignment 18

1.In your comments on GitHub, discuss how these text-mining approaches have become a standard in today’s computa1onal techniques in processing texts and the potential (and the shortcomings) that was expected but not necessarily been realized or superseded by novel/different approaches.

The three texts introduced the field of text data mining and how it could be implemented in real life. Text Data Mining is helpful in many fields, such as in bioscience, where scientists can extract patterns and deduct hypotheses about the disease from a combination of text fragments in biomedical literature titles. Moreover, text data mining could help us uncover social impact. As we can see from our classmate Daniel’s group’s approach– deriving trends in social attitudes about Russia/Ukrain issue by analyzing textual patterns from Twitter memes.

However, text data mining also has its own limitations: it couldn’t represent all the information and knowledge beyond the communication of texts. For example, many types of knowledge are conveyed through other media like graphics and sound. Text data mining couldn’t translate those forms of media, therefore, it might cause misunderstanding in interpreting specific topics that can only be fully comprehended through those media. Furthermore, text data mining couldn’t uncover the decisions and thought processes behind the door; the textual data is just a lower-level data identification and organization. It still depends heavily on human cognition to link different sources and form hypotheses based on the processes of textual data.

2.Read Lisa Gitelman’s (ed.) “Raw Data” Is an Oxymoron, Introduc1on (Cambridge, Mass, MIT-Press, 2013, 1-14) and comment on the often used concept of “raw data.” (text on Canvas)

There’s never anything about ‘raw’ data. All the data we are exposed to have already been ‘cooked’ – they have been processed and interpreted by different professionals in different disciplines through their own methods. All the data are not isolated; they are all contextualized and stored within a social-cultural context. For example, the language that records the data already contains the cultural construct that adds one more layer to the ‘original’ data.

The article also mentions the term data aggregation – the overlay of operations that renders data’s value also has impacts. The steps of different levels of obtaining the data sets, from data gathering to cleaning to analyzing, are all embedded with the researchers’ interpretations and assumptions and are relatively biased. For example, changing one filter in the algorithm model would produce different results. Maintaining the consistency of research methods to get stable data is crucial.

-

Assignment 18-Text mining reading

These articles are all based on western language systems, so I was curious about how it can work in Chinese. I am suprised to find that there are also very many uses of text mining based on Chinese. For example, the keyword composition of Chinese Tang poems and Song lyrics mapped by Zero E-lab research lab some time ago is very interesting, capturing all the high frequency or more trendy words of ancient poems. And the connection between each keyword is clearly shown by using the method of network analysis diagram, and even some readers are able to deduce some classic poems by themselves according to that network diagram. However, it is not so simple to deal with the subtext of ancient Chinese (literary), especially poetry, because single-word words account for more than 80% of the statistical information of ancient Chinese vocabulary, plus the fact that ancient Chinese has a great deal of meaning in small words, so the subtext technique for modern Chinese is often not applicable to it. In the analysis process, the results are only the “introduction” and “clues”, the most important thing is to rely on the human brain to analyze the results, with the help of the background/business knowledge and analytical models, from the surface of the text to its depths, to discover the deeper meaning that can not be grasped by the shallow reading, to explore its value.

-

Text Mining & Raw Data Readings

Notes on Text Mining Readings:

How these techniques have become standard:

- As defined by Marti Hearst (“the discovery by computer of new, previously unknown information, by automatically extracting information from different written resources”), text mining has certainly become a standard method of data extraction. His “linking together of extracted information” is reflected in the natural structural format of the extractions (e.g., JSON, CSV), which acts as a sort of dictionary to provide multiple levels of metadata.

- Hearst imagines text mining as primarily useful when we don’t know much about the information we’re planning to extract: but this is not always the case. Today, we often mine text about which we know very much, but whose specific details we don’t have in a manner conducive to our research. For example: one can go to a website and, depending on the situation, see the pertinent textual data there – but using the browser interface alone, and without text mining, this data can’t always be formatted in ways that allow for the desired analyses. Then again, Hearst wouldn’t purely call this “‘real’ text mining… that discovers new pieces of knowledge.”

- What Hearst earlier called text mining has now become an essential element of textual analysis within natural language processing. Today, “extraction” and “analysis” are sometimes combined within a single function, which leads to a blurring of the lines between these disparate tasks. In the example of topic modeling, for example, this process has been merged. Visualizations, and references to external libraries that allow any number of advanced functionalities, have also been incorporated within individual Python files. Topic modeling and other NLP methods also introduce far more advanced methods of organizing information into n-dimensional matrices that were not envisioned as components of text mining. Part of the job of text mining, after all, is to organize the text into discrete units that can be called upon when needed.

Potentials or shortcomings that were envisioned, but wasn’t realized, or were superseded:

- Text mining was envisioned as entirely separate from search tools. But it can still be used to conduct advanced searches of websites and web-based databases.

- Text mining was also envisioned as separate from (non-textual) data mining, but today, the same processes may be used for both, depending on the tool or combination of tools (e.g., API, Python, etc.).

- Some of what Hearst defines as text mining now falls under the umbrella of psycholinguistics (e.g., LIWC), although it performs similar functionality.

Notes on Raw Data Reading:

- As suggested in earlier readings – through which we learned that no data is “given” – here, we observe that no data is truly raw. All data is constructed and selected. All data is “cooked” to one degree or another.

- Here, we can see that depending on the discipline, data is cooked differently; it is extracted, shaped, and interpreted differently. Even data within fields can be cooked differently (as illustrated by the author’s example of varying versions of Milton poems).

- As the author notes, “data need us.” Data does not exist without human extraction, observation, analysis, composition. The fact that data, the plural of datum, is used more commonly also illustrates the widespread need for multiples in our data: not only does data need us, but we need data, and to accomplish all manner of tasks, we need as much data as we can get. That said, a unique distinction of Gitelman and Jackson’s is interesting to consider: “The singular datum is not the particular in relation to any universal (the elected individual in representative democracy, for example) and the plural data is not universal, not generalizable from the singular; it is an aggregation. The power within aggregation is relational, based on potential connections: network, not hierarchy.”

- Usability is also addressed in this chapter. Notably, data must be usable for the duration of a study, and for future researchers. This means that data must be “as raw as possible,” in a sense. Otherwise, it may be ideal for it to be annotated in such a way that its “original” can be extracted and used in a new context.

-

Reading Assignment: Text Mining/Natural Language Processing - Qingyu Cai

The three articles introduce the definition of text mining, its features, and different specific techniques. The most significant use of text mining nowadays is the search engine. When users type keywords in the search bar, the computer system uses text mining to collect related information. Furthermore, the current Google search not only includes the technique of text mining but also information in various formats, including images, videos, and shopping links. Text mining uses the topic-modeling to identify related texts, while image search utilizes AI technology to define elements and colors of pictures to collect similar images. Besides, text mining can also be used in customer service to gather consumer feedback and get to know their main pain points. By analyzing the keywords of customers’ feedback, the company can quickly learn what consumers are unsatisfied with to come up with instant solutions. Although letting consumers grade the service by rating can also showcase the level of satisfaction, companies still need more information about the reasons behind the rating. In terms of the shortcomings of text mining, just as mentioned in the reading named Alien Reading: Text Mining, Language Standardization, and the Humanities, computers are not able to account for the nuances of literary language due to the inability of sentiment. Computers can quite well understand scientific texts without much sentiment. However, in some types of literacy, words can not be interpreted without understanding the whole context. As a result, as mentioned in the reading called Untangling Text Data Mining, we do not need wholly artificial intelligent text analysis; rather, a mixture of computationally-driven and user-guided analysis may open the door to exciting new results.

-

Reading Assignment: Raw Data is an Oxymoron - Qingyu Cai

Raw data seems to be literally. As mentioned in the reading, the author says, at first glance, data are apparently before the fact: they are the starting point for what we know, who we are, and how we communicate. This shared sense of starting with data often leads to an unnoticed assumption that data are transparent, that information is self-evident, the fundamental stuff of truth itself. However, raw data depends on culture, not just itself. It is the culture that interprets and imagines the meaning of the data. Raw data is not a natural resource. Instead, it is generated, gathered, and interpreted by culture. Based on different usage, data can be “cooked” differently. As a result, raw data embeds assumptions. In order to learn and use raw data well, it is crucial to promote interdisciplinary research.

-

Assignment 18- data mining- Sally Chen

Data mining is the process of discovering patterns in data, and the main approaches include clustering, association, word cloud, etc. These methods are based on the results of human cognitive science research that has been conducted, and common human cognitive strategies are simulated by computer algorithms. I think the similarities between the algorithms and human cognitive strategies are the reason they stand out as the most commonly used methods- because the algorithms are more likely to come out with “human-like” insights and decisions. However, the results of data mining are necessarily different from the results of human thinking, especially when academia has not yet fully researched the psychological mechanisms of more complex psychological processes, e.g. common sense and creativity. One of the major advantages of data mining is that it helps to answer questions we did not know to ask, especially to obtain some insights that may be counter-intuitive or counter-conventional. In addition, the interpretation of the results and the decision-making process still require human involvement. For example, there are many parts of the text analysis process for large samples that need to be done manually, and these operations may indirectly affect the results of data mining. The biggest problem of data mining in humanities studies is that there is a fundamental difference between computer programs and human cognitive processes. Although computer programs are designed based on human cognitive strategies, they do not fully simulate human thought processes, which may lead to biased results. The most typical problem is that human analysis of “topics” is not based on the frequency analysis and combination of individual words, but also on the context in which the words occur, which is not taken into account in the frequency calculation by the computer. Other challenges include the accessibility of certain databases and the non-transparency of data mining algorithms. Some databases are not always accessible to scholars, so data mining analysis may be potentially biased within the scope of available data.

-

Assignment 18- raw data- Sally Chen

According to Wikipedia (https://en.wikipedia.org/wiki/Raw_data), raw data is considered a relative term, because even once raw data have been “cleaned” and processed by one team of researchers, another team may consider these processed data to be “raw data” for another stage of research. An example I can think of is the authors of individual experimental research and meta-analysis. For experimental researchers, the “raw data” is all the variable data they collected from subjects, and for the authors of meta-analysis. For experimental researchers, the “raw data” is all the variable data they collected from subjects, and for the authors of meta-analysis, the “raw data” is all the processed data provided by the experimental researchers. The concept of “Raw data” is useful while misleading. On the one hand, the concept emphasizes that the data are raw and unprocessed for the data user, and that future processing is based on raw data. This concept establishes that subsequent data interpretation and visualization are eventually based on this version of data. In addition, the concept of “raw data” is misleading. In addition to the relative nature of “raw”, another major problem is the assumptions that exist in data collection and management. As mentioned in the article Why Data is Never Raw (https://www.thenewatlantis.com/publications/why-data-is-never-raw): “as we’ve seen, even the initial collection of data already involves intentions, assumptions, and choices that amount to a kind of pre-processing”. The purpose of collecting and managing data is to make interpretations and inferences, which inherently determines the methodology of data collection, including what is measured, how it is measured, how it is collected, where it is collected from, and how it is recorded. With these limitations, the inferences we can make are inherently limited, and there is the problem of not fully reflecting on reality. This problem leads to a contradiction in the objectivity and absoluteness implied by the concept of “raw data”.

-

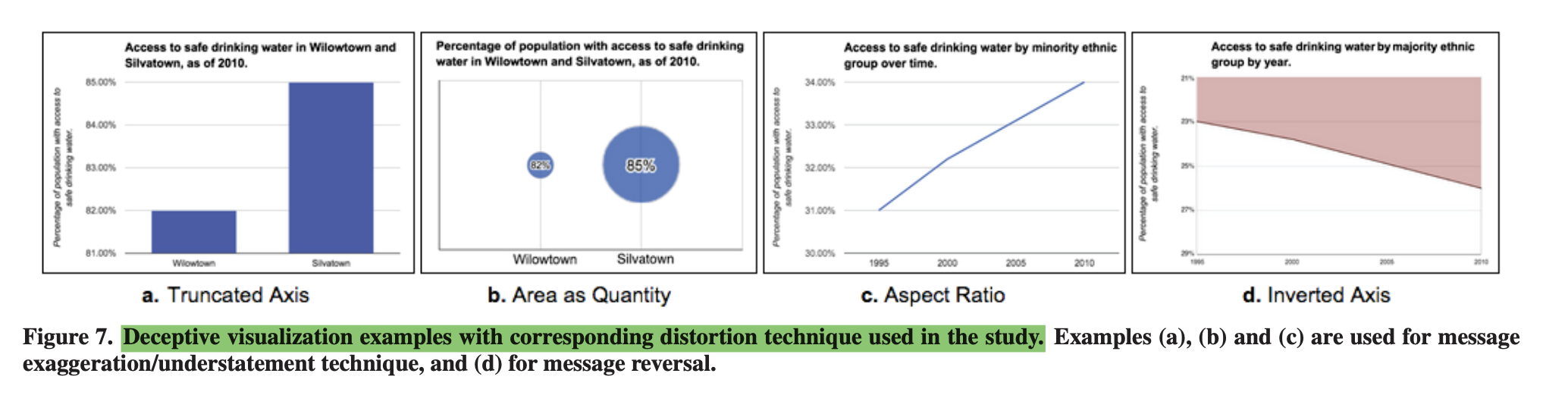

How to Lie with Data Visualization & How Deceptive are Deceptive Visualizations? An Empirical Analysis of Common Distortion Techniques

Concerning the two texts, it is interesting how deceptive figures can be when handling objective data and statistics. Although the two texts point out that figures should be made to elimitae message reversal and message exaggeration, some points of contention came to mind as to seeing these fake statistics. The first being that what seems like exaggeration is in actuality the true message. Sometimes statistics are made on the fly or on a whim, especially in the realm of sports, and the need to pooint out outliers is crucial to the audeince. For instance, a statistc that creates an average of 75% on a chart, needs to “embellish” viewing a 60% on the same chart - if you regularly have the axis out of a 100 then you would not necessarily see the outlier. The second point is who is the fault on when the message is viewed wrongly despite being given all the data. If the graph was presented without features, then the fault would objectively be on the graph maker, but if having all the labels and features, the person still makes an exaggeration then it is on the fault of the audience, and how short their attention span is. Unless what the writers are advising is to to present information as if the audience has no deductive reasoning skills, then that is another area of conversation.

-

Comments on readings about visualization deception

Ravi Parikh’s “How to Lie with Data Visualization”

- General Comments

- I’m surprised by the pie chart that doesn’t add up to 100%. This reminds me of some interesting chapters in “Storytelling with Data” by Cole Nussbaumer Knaflic about both 3D visualizations (how “warped” perspectives and focal points make some figure appear more relevant than they really are, and others less relevant) and pie charts (people tend to overweight different colors and slices in relation to one another, based on visual hierarchy, etc.).

- It seems that deceptive visualizations are being used commonly in very large-scale information reporting systems (e.g., Fox News, sports, business communications), and so we might consider that they are incentivized if they can lead to particular outcomes. How can we de-incentivize such visualizations, especially since there do not appear to be any strong legal boundaries to their propogation? - Most Deceptive Techniques

- Manipulating axes (y) to shape viewer’s opinions. There was a similar example recently with housing market pricing (I think in WSJ), but they tried to do the opposite (show that prices weren’t rising as much as they really were) by excessively inflating the y axis.

- Cumulative graphs: they’ll naturally make it seem like your numbers are going up because that’s how positive number cumulations work. Every new number added to the chart includes its predecessor, so the numbers have to go up, which warps how we view, for example, revenue growth.

Anshul Vikram Pandey’s “How Deceptive Are Deceptive Visualizations?”

- General Comments- I’m particularly interested in the authors’ classifications of deceptions as either message reversal (“what”) or message exaggeration/understatement (“how much”). These seem very useful metrics when looking, also, at other forms of visual communications that might not be seeking to communicate or translate data through scale (e.g., memes, videos). These two designations also, essentially, tell us the subject and the scale of a subject’s value(s), which seems to be a framework that could be applied widely.

- For their definition, I assume that “actual message” refers to the true numerical value or meaning, of data, rather than to a subjective interpretation of data: “[A deceptive visual is] a graphical depiction of information, designed with or without an intent to deceive, that may create a belief about the message and/or its components, which varies from the actual message”. - Most Deceptive Techniques

- For me, the inverted axis is the most upsetting visual deception technique mentioned here, because it uses our implicit assumptions, which are needed for ease of data access and interpretability, against us. There are few things within our everyday visual calculations that we might assume more readily than “up = more” and “down = less”, for example; this has even made its way into human gesture and body language.

-

Assignment 17

Data visualization is usually biased and coded with the author’s intent of what messages would like to be conveyed. Deception in data visualization happens on many levels, from how the reader reads the data to how they interpret data. Message exaggeration is apparent in our daily life, e.g., how Tim Cook presents Apple’s cumulative sales data without having a precise quantity scale for the y-axis. Depending on what unit the y-axis is, the visualization would be very different (billions of iPhone sales vs. hundreds of iPhone sales). The exaggerated graph misleads users to think iPhones sales are increasing because cumulative data is always increasing, but in reality, iPhone’s quarterly sales are decreasing. Message reversal is also confusing to inattentive readers (e.g. the Gun Death in Florida chart). The author takes advantage of readers’ habit to read from bottom to top and purposefully reversing the measurement to decrease the negative response to the data shown. As designers, when we visualize data, we must be careful with the methods and type of measurements we use to provide an unbiased data analysis. We must also consider readers’ digital literacy and familiarity with the data context.

-

Reading Assignment - Deceptive Visualizations: Qingyu Cai

I find the inverted axis as the most deceptive technique. Firstly, this technique ignores the convention, and readers won’t realize the trick without being reminded. The first time I read the short passage by Ravi Parikh, I was treated by the graph the author showed. Not until the author mentioned that the graph’s Y-axis was upside-down did I realize that I had misunderstood the chart reversely. Secondly, the impact of the convention is much more substantial than we have imagined because people accept it as always-correct principles, which is hard for people to change this mindset. For instance, even if readers realize that the Y-axis is upside-down, it’s still hard for us to accept this representation. Finally, the message created by the inverted axis is reversal instead of message exaggeration or understatement, which means that it totally changes the message’s meaning. As a result, readers would interpret the meaning wrong without noticing the axis.

-

How to Lie with Data Visualization - Joshua Mbogo

A New Post

Out of the list of ways to lie with data visualization almost all of them had a overlying theme. The main way to lie is to skew or misrepresent data through the use of poroptions and illogical graphs. When thinking about the Y-axis putting the y-axis in a scale that is not proper for the data being discuss will leave the data either showing an exaggerate or underwhelming result. Additionally, in some cases graphs must use a logarithmic scale and if the wrong log is used such a scneario can also occur. Another thing I noticed was using the wrong kind of data difference between actual and cumlative data will produce a false sense of increase if the user doesn’t properly digest the graph and its content. With a cumlative graph one must focus on the change of the slope and not the actual value of the slope will in a normal traditional graph looking at the specific values gives you information of how the data is change over the x-axis (which in the example presented in the article was time). Enter text in Markdown. Use the toolbar above, or click the ? button for formatting help.

-

Assignment 17- Sally Chen

1) The most common and deceptive representation is probably changing the y-axis. It also seems to have the advantage of magnifying significant effects or significant differences in academia and is considered a reasonable operation to make statistical graphs more “readable”. However, this can lead to some difficulties for researchers when making cross-study comparisons because the y-axis is not uniform. Compared with tables that provide direct numbers, charts can have a lot of space to do deceptive techniques.